In our previous articles, we discussed the different types of AI evals and explored AI evaluation frameworks. In this article, we turn to designing and implementing AI evals in practice.

Evaluating AI models isn’t just about metrics; it’s about creating repeatable, reliable processes that confirm your AI delivers real product value. Here, we’ll explore how product managers (PMs) can systematically design and implement AI evals, using frameworks, workflows, and tooling to measure and improve model quality.

1️⃣ Define Evaluation Objectives

Before selecting metrics or tools, clarify why you are evaluating:

Business Goals: Reduce support tickets, improve search relevance, increase engagement.

Model Goals: Accuracy, robustness, factuality, fairness, or efficiency.

User Goals: Helpfulness, clarity, safety, accessibility.

PM Tip: Link every metric to a product outcome. This keeps the team from optimizing purely technical metrics that don’t affect users.
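
To make the tip above concrete, here is a minimal sketch of a metric registry that flags any metric without a product outcome attached. The `MetricSpec` class, its field names, and the sample metrics are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricSpec:
    name: str
    goal_type: str        # "business", "model", or "user" (labels assumed for this sketch)
    product_outcome: str  # the outcome this metric should move; empty if none


def unlinked_metrics(specs):
    """Return the names of metrics that have no product outcome attached."""
    return [s.name for s in specs if not s.product_outcome.strip()]


specs = [
    MetricSpec("f1_intent_classifier", "model", "fewer misrouted support tickets"),
    MetricSpec("ndcg_at_10", "model", "improved search relevance"),
    MetricSpec("perplexity", "model", ""),  # no outcome yet, so it gets flagged
]

print(unlinked_metrics(specs))  # ['perplexity']
```

A check like this could run whenever the metric list changes, so every new metric arrives with a stated reason for existing.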

2️⃣ Choose the Right Eval Framework

Frameworks guide what to measure and how to structure evaluations. Examples include:

| Framework | Focus | Components / Examples |
|---|---|---|
| R-F-R-G-E-S-R-L | Systematic evaluation of LLMs | Rubric → Failure Mode → Robustness → Grounding → End-to-End → Safety → Regression → LLM-as-Judge |
| Reference-Based vs Reference-Free | Decide if you have ground truth or need human judgment | BLEU, ROUGE (reference-based) vs Human Evaluation, LLM-as-Judge (reference-free) |
| Task-Specific Evaluation | Tailor to model’s application | Classification, Regression, Ranking, Generative, Embedding, Business Metrics |

PM Tip: Combining frameworks helps you cover both technical rigor and real-world product alignment.
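
To show what "reference-based" means in practice, here is a small sketch that scores a model output against a ground-truth reference by unigram overlap. It is a simplified stand-in in the spirit of ROUGE-1, not the official ROUGE implementation: it splits on whitespace, lowercases, and does no stemming. The function name is made up for this example.

```python
from collections import Counter


def unigram_overlap_f1(candidate: str, reference: str) -> float:
    """F1 over overlapping unigrams, with counts clipped to the reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # & keeps the minimum count per word
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


score = unigram_overlap_f1("the cat sat on the mat", "the cat is on the mat")
print(round(score, 3))  # 0.833
```

A reference-free evaluation has no `reference` argument at all; the score comes from a human or an LLM judge applying a rubric to the output alone.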

3️⃣ Select Metrics

Metrics must reflect what success looks like for your product.

Classification / Regression: Accuracy, F1 (classification); MAE, R² (regression)

Ranking / Retrieval: NDCG, Precision@K, MRR

Generative / LLM: BLEU, ROUGE, Faithfulness, Human/LLM judgment

Embedding / Similarity: Cosine similarity, human validation

Business Metrics: Task success rate, CTR, reduction in manual work

PM Tip: Always combine quantitative metrics (e.g., F1) with qualitative evaluation (human or LLM review) to catch subtle errors or subjective failures.
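
For readers who want to see how a few of the quantitative metrics above are computed, here is a minimal pure-Python sketch of F1, Precision@K, and MRR. The function names and toy inputs are illustrative; in a real project you would more likely use an established metrics library.

```python
def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def precision_at_k(ranked_ids, relevant_ids, k):
    """Share of the top-k results that are relevant."""
    return sum(1 for d in ranked_ids[:k] if d in relevant_ids) / k


def mean_reciprocal_rank(queries):
    """Average of 1/rank of the first relevant result per query (0 if none)."""
    total = 0.0
    for ranked_ids, relevant_ids in queries:
        for rank, doc_id in enumerate(ranked_ids, start=1):
            if doc_id in relevant_ids:
                total += 1.0 / rank
                break
    return total / len(queries)


print(round(f1_score([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]), 3))         # 0.667
print(round(precision_at_k(["a", "b", "c", "d"], {"a", "c"}, 3), 3))  # 0.667
print(mean_reciprocal_rank([(["a", "b", "c"], {"b"}),
                            (["x", "y", "z"], {"x"})]))               # 0.75
```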

4️⃣ Design Evaluation Workflows

Structured workflows ensure consistency and repeatability:

Data Collection: Curate representative datasets (both typical and edge cases).

Annotation / Ground Truth: Label data or define success criteria.

Automated Testing: Run batch evals using scripts or an LLM-as-judge.

Human Review: Spot-check outputs for quality, fairness, and safety.

Segmentation & Analysis: Evaluate by user segment, failure mode, or edge-case scenario.

Reporting & Iteration: Summarize findings for product and engineering teams, plan improvements.

PM Tip: Build evals into the development lifecycle so that monitoring model quality is continuous rather than a one-off activity.
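
The workflow above can be sketched as a small batch harness: run the model over a labeled dataset, score each output, report pass rates per segment, and collect failures for human review. The case schema (`id`, `segment`, `input`, `expected`), the toy model, and the exact-match scorer are all assumptions made for this example.

```python
from collections import defaultdict


def run_eval(cases, model_fn, score_fn):
    """Run model_fn on each case; return per-segment pass rates and failures."""
    totals = defaultdict(lambda: [0, 0])  # segment -> [passed, total]
    failures = []
    for case in cases:
        output = model_fn(case["input"])
        passed = score_fn(output, case["expected"])
        totals[case["segment"]][0] += int(passed)
        totals[case["segment"]][1] += 1
        if not passed:
            # Failed cases are kept so a human can spot-check them later.
            failures.append({"id": case["id"], "segment": case["segment"], "output": output})
    pass_rates = {seg: passed / total for seg, (passed, total) in totals.items()}
    return pass_rates, failures


# Toy stand-ins: a "model" that uppercases text and an exact-match scorer.
cases = [
    {"id": 1, "segment": "typical", "input": "hello", "expected": "HELLO"},
    {"id": 2, "segment": "typical", "input": "world", "expected": "WORLD"},
    {"id": 3, "segment": "edge_case", "input": "", "expected": ""},
    {"id": 4, "segment": "edge_case", "input": "hello ", "expected": "HELLO"},
]
pass_rates, failures = run_eval(cases, str.upper, lambda out, exp: out == exp)
print(pass_rates)                   # {'typical': 1.0, 'edge_case': 0.5}
print([f["id"] for f in failures])  # [4]
```

Reporting by segment matters here: the overall pass rate is 75%, which hides the fact that every failure sits in the edge-case segment.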

5️⃣ Tooling for AI Evals

Several tools and platforms help automate or scale evaluations:

| Tool / Platform | Use Case | Notes |
|---|---|---|
| LangChain Eval | Structured LLM evaluation | Supports reference-based and reference-free scoring |
| OpenAI Evals | Benchmark models systematically | Provides templates for human-in-the-loop or automated evals |
| Weights & Biases / MLflow | Experiment tracking + metrics logging | Tracks performance over time, enables regression checks |
| Custom LLM-as-Judge Pipelines | Scale human review | Automates scoring using a stronger LLM with defined rubric |

PM Tip: Evaluate both tooling capabilities and ease of integration; good tooling makes evals repeatable and scalable.
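
As a rough illustration of a custom LLM-as-judge pipeline, the sketch below builds a rubric prompt, sends it to a judge, and parses a numeric score. The rubric wording, the `SCORE:` reply format, and `judge_fn` are assumptions of this example; `judge_fn` is a placeholder for whatever client call your team uses to reach the judging model, and a fake judge stands in for it here so the code runs on its own.

```python
import re

RUBRIC = (
    "Score the answer from 1 (poor) to 5 (excellent) for helpfulness and "
    "factual accuracy. Reply with 'SCORE: <number>' and a one-sentence reason."
)


def build_judge_prompt(question, answer, rubric=RUBRIC):
    return f"{rubric}\n\nQuestion: {question}\nAnswer: {answer}\n"


def parse_score(judge_reply, low=1, high=5):
    """Extract the integer score; return None if it is missing or out of range."""
    match = re.search(r"SCORE:\s*(\d+)", judge_reply)
    if not match:
        return None
    score = int(match.group(1))
    return score if low <= score <= high else None


def judge_batch(examples, judge_fn):
    """judge_fn is any callable mapping a prompt string to a reply string."""
    results = []
    for ex in examples:
        reply = judge_fn(build_judge_prompt(ex["question"], ex["answer"]))
        results.append({"id": ex["id"], "score": parse_score(reply)})
    return results


def fake_judge(prompt):
    # Stand-in for a real model call, used only to make the sketch runnable.
    return "SCORE: 4 Mostly correct." if "Paris" in prompt else "I cannot decide."


examples = [
    {"id": 1, "question": "Capital of France?", "answer": "Paris"},
    {"id": 2, "question": "Capital of Peru?", "answer": "Quito"},
]
results = judge_batch(examples, fake_judge)
print(results)  # [{'id': 1, 'score': 4}, {'id': 2, 'score': None}]
```

A `None` score means the judge's reply could not be parsed, which is a natural signal to route that example to human review rather than silently dropping it.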

6️⃣ Integrate Continuous Evaluation

AI model quality is not static. Implement continuous evaluation and regression testing:

Re-run evals on every model version or prompt update.

Track key metrics over time, including safety, fairness, and user impact.

Trigger alerts when performance drops or failure modes emerge.

PM Tip: Continuous evals give confidence for safe, incremental product launches.
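
A regression check of the kind described above can be as simple as comparing a candidate version's metrics against a stored baseline and flagging any drop beyond a tolerance. In this sketch the metric names, values, and the 0.01 tolerance are made up, and `lower_is_better` is a hypothetical parameter for metrics such as an unsafe-response rate where an increase is the regression.

```python
def find_regressions(baseline, candidate, tolerance=0.01, lower_is_better=()):
    """Return the metrics where the candidate is worse than baseline by more than tolerance."""
    regressions = {}
    for name, base_value in baseline.items():
        if name not in candidate:
            regressions[name] = "missing in candidate"
            continue
        delta = candidate[name] - base_value
        if name in lower_is_better:
            delta = -delta  # for these metrics, going up is the bad direction
        if delta < -tolerance:
            regressions[name] = f"{base_value:.3f} -> {candidate[name]:.3f}"
    return regressions


baseline = {"f1": 0.82, "faithfulness": 0.90, "unsafe_rate": 0.02}
candidate = {"f1": 0.83, "faithfulness": 0.85, "unsafe_rate": 0.05}

regressions = find_regressions(baseline, candidate, lower_is_better=("unsafe_rate",))
for metric, change in regressions.items():
    print(f"ALERT {metric}: {change}")
```

Run on every model version or prompt update, a non-empty result could fail a CI step or trigger an alert before the change reaches users.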

7️⃣ Close the Loop to Product Decisions

Finally, tie evaluation results to actionable product insights:

Identify critical failure modes and prioritize fixes by user impact.

Inform UX or workflow changes based on observed AI behavior.

Adjust thresholds, prompts, or retrieval pipelines to improve outputs.

PM Tip: AI evaluation is a product lever, not just a technical checkpoint; use its insights to drive better user outcomes and business value.
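
One simple way to prioritize failure modes by user impact is to rank them by how often they occur multiplied by how bad they are. The sketch below assumes that scoring rule (rate × severity on a 1 to 5 scale), which is a convention chosen for this example rather than a standard formula; the failure modes and numbers are invented.

```python
def prioritize(failure_modes):
    """Sort failure modes by estimated impact (rate x severity), highest first."""
    return sorted(failure_modes, key=lambda f: f["rate"] * f["severity"], reverse=True)


failure_modes = [
    {"name": "overly_long_answers", "rate": 0.15, "severity": 1},
    {"name": "wrong_language", "rate": 0.01, "severity": 3},
    {"name": "hallucinated_refund_policy", "rate": 0.04, "severity": 5},
]

ranked = prioritize(failure_modes)
for f in ranked:
    print(f"{f['name']}: impact={f['rate'] * f['severity']:.2f}")
```

Here the rare but severe hallucination outranks the frequent but mild verbosity issue, which is the kind of trade-off a raw failure count would miss.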

Summary

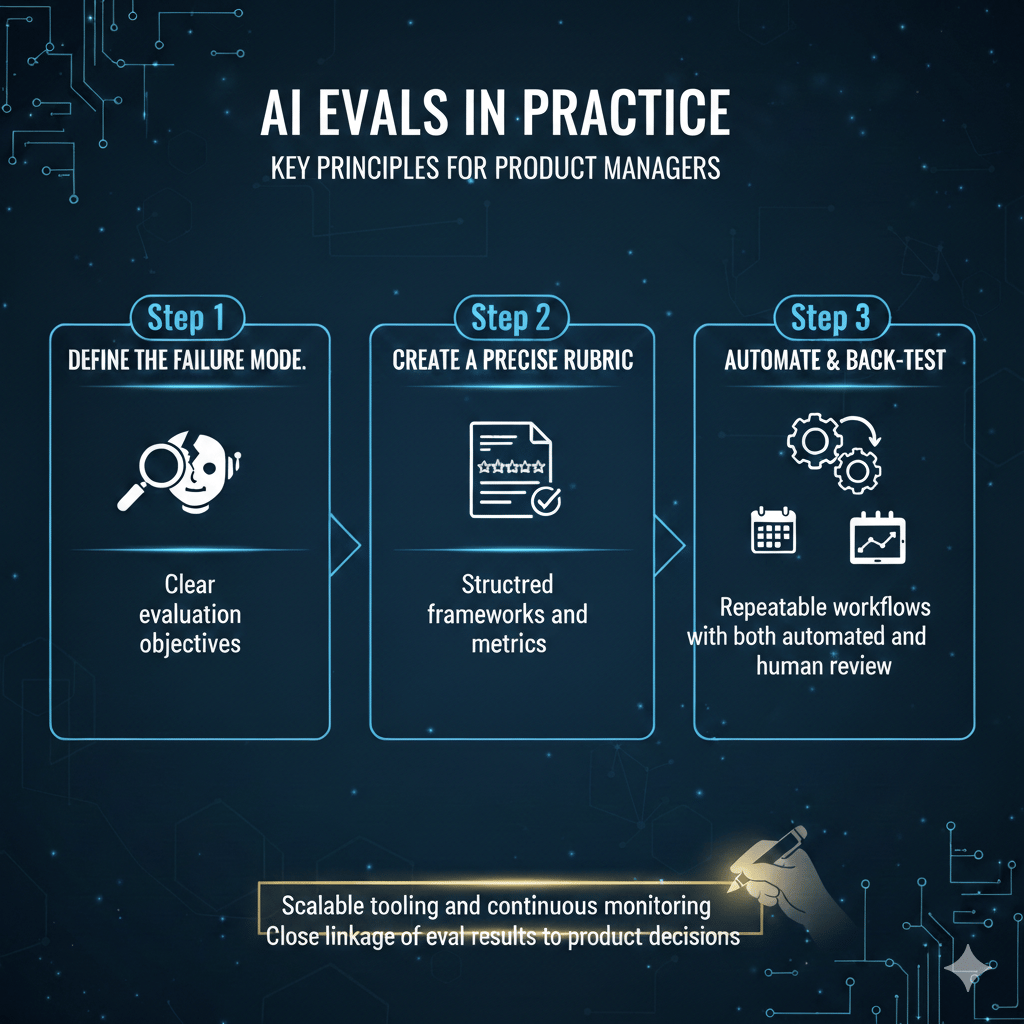

Designing and implementing AI evals requires:

Clear evaluation objectives

Structured frameworks and metrics

Repeatable workflows with both automated and human review

Scalable tooling and continuous monitoring

Close linkage of eval results to product decisions

With these steps, PMs can ensure models are high-quality, safe, and aligned with user and business goals, turning AI evaluation into a strategic advantage.