💸 The Expensive (~$250) Mistake Most AI Teams Don’t Notice

Every time a user asks:

“What are your hours?”

…and your system routes that query to GPT-4o, you’re overspending by ~17× compared with a small model like GPT-4o mini.

Now scale that mistake:

100,000 queries/month

The majority are repetitive, factual, or easily classifiable

Each one unnecessarily hitting a large LLM

That’s ~$250–650/month burned on questions that a cached lookup could answer in under 50 milliseconds at almost no cost.

This isn’t a prompting problem. This is a routing problem.

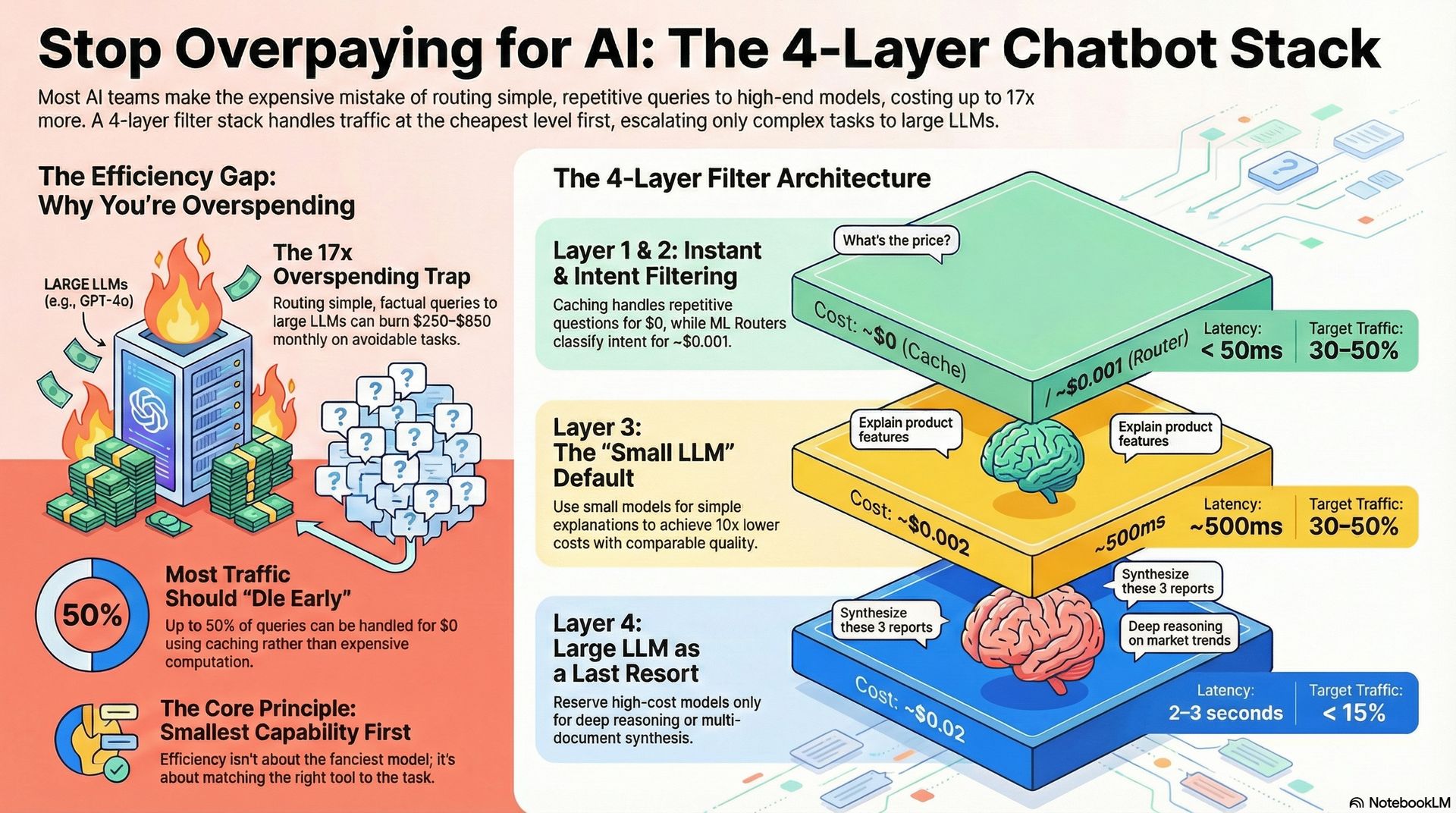

The 4-Layer Chatbot Stack (Think Filters, Not Models)

Your chatbot should behave like a series of filters. Each layer tries to handle the query and escalates it to the next layer only when it can't. A query reaches the LLM layers only if neither of the first two layers, the cache and the ML router, can handle it.
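As a rough illustration of the filter idea, here is a minimal Python sketch of the cascade. Every layer handler (`cache_layer`, `ml_router_layer`, `small_llm_layer`, `large_llm_layer`) is a hypothetical placeholder with toy logic standing in for a real cache, classifier, or model call; the point is only the control flow, where each layer either returns an answer or returns `None` to pass the query on.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# A layer returns an answer, or None to escalate the query to the next layer.
Layer = Callable[[str], Optional[str]]


@dataclass
class RoutedAnswer:
    answer: str
    layer: str  # name of the layer that handled the query


def route(query: str, layers: list[tuple[str, Layer]]) -> RoutedAnswer:
    """Try layers cheapest-first and stop at the first one that answers."""
    for name, handler in layers:
        answer = handler(query)
        if answer is not None:
            return RoutedAnswer(answer=answer, layer=name)
    raise RuntimeError("no layer produced an answer")


# --- Hypothetical placeholder layers (toy logic, not real services) ---
FAQ_CACHE = {"What are your hours?": "[cached answer: opening hours]"}


def cache_layer(query: str) -> Optional[str]:
    return FAQ_CACHE.get(query)  # exact-match lookup


def ml_router_layer(query: str) -> Optional[str]:
    # Stand-in for an intent classifier that handles one structured workflow.
    if "order status" in query.lower():
        return "Sure, please share your order number."
    return None


def small_llm_layer(query: str) -> Optional[str]:
    # Stand-in escalation rule: treat long queries as too complex for the small model.
    if len(query.split()) > 20:
        return None
    return f"[small LLM answer to: {query}]"


def large_llm_layer(query: str) -> Optional[str]:
    return f"[large LLM answer to: {query}]"  # last resort: always answers


STACK: list[tuple[str, Layer]] = [
    ("cache", cache_layer),
    ("ml_router", ml_router_layer),
    ("small_llm", small_llm_layer),
    ("large_llm", large_llm_layer),
]
```

With this stack, `route("What are your hours?", STACK).layer` is `"cache"`, while a long, open-ended question falls through to `"large_llm"`. Recording which layer answered each query also gives you the traffic-share numbers you need for monitoring.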

Personally, I always estimate costs from real data and look for the most affordable option that doesn't compromise answer quality, then route each problem to a small or large LLM as appropriate. A cache layer is crucial because most user questions are repetitive, and many of them don't need an LLM call at all. Hence my rule of thumb: “Most traffic should die early, cheaply.”

Core Principle

Use the smallest capability that works

A cached response beats ML classification. ML beats small LLMs. Small LLMs beat large ones. Smart routing isn't about having the fanciest model—it's about matching the right tool to the task.

Now, let’s try to understand this 4-layer chatbot stack in more detail.

Image generated using ChatGPT

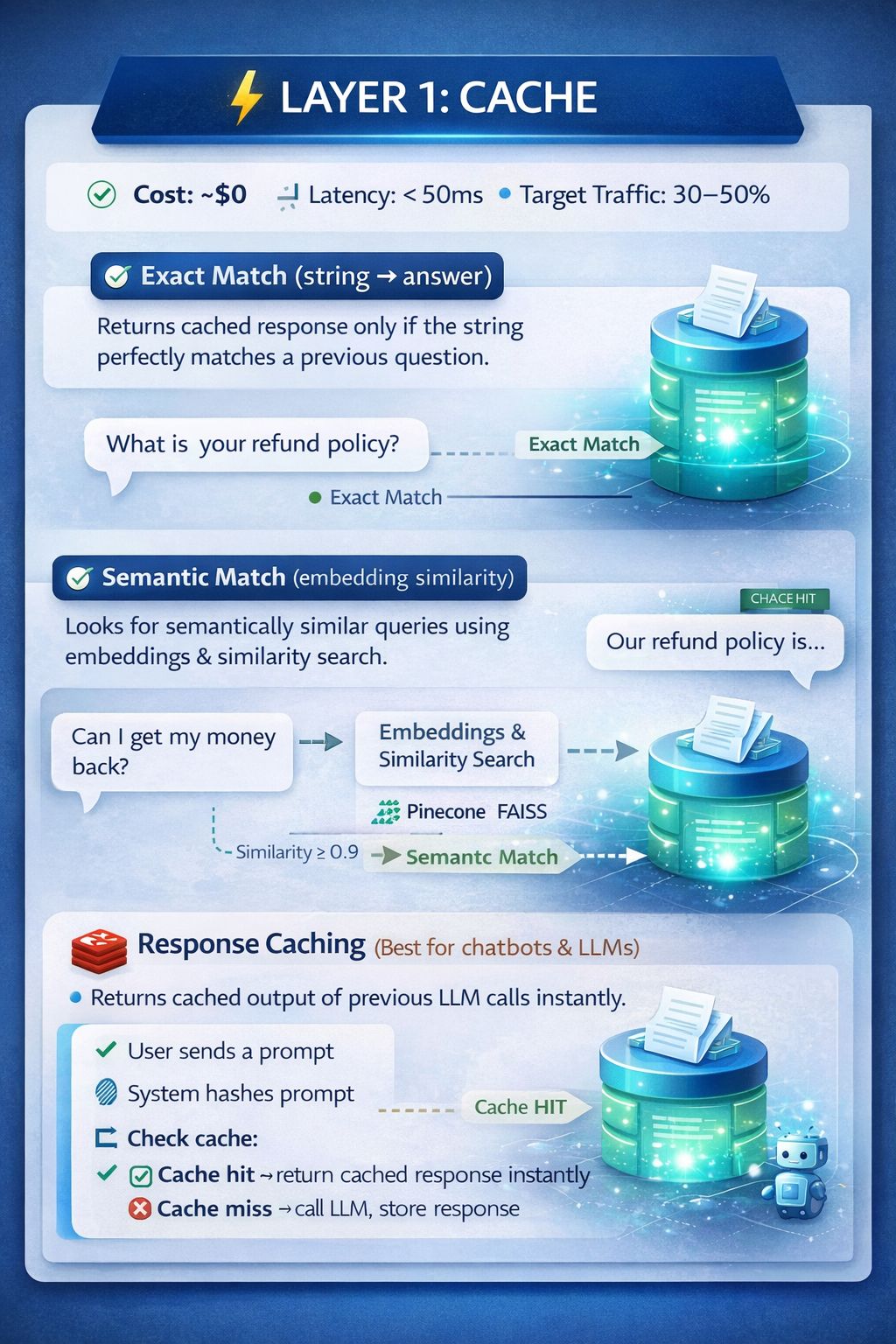

Layer 1: Cache

Cost: ~$0 · Latency: <50 ms · Target traffic: 30–50%

What it does:

Exact match (string → answer): The cache answers a question only when its text exactly matches a previously asked question. It does not look at meaning, so a reworded version of the same question is a miss.

Semantic match (embedding similarity): The cache matches on meaning rather than exact text, using embeddings and similarity search. Tools such as FAISS or Pinecone can be used to implement semantic caching.

How it works

For example, suppose a previous user asked: “Is this the exact same prompt?”

Now you ask: “Is this basically the same question?” The strings differ, so an exact-match cache misses; a semantic cache compares their meaning instead.

Semantic matching relies on embeddings and similarity search. An embedding is a vector representation of a piece of text, and semantic similarity measures how close two such vectors are (for example, using cosine similarity), which reflects how close the two texts are in meaning.

Convert the prompt into an embedding vector

Search cached prompts using cosine similarity

If similarity ≥ threshold (e.g. 0.90):

Reuse the cached response

Otherwise:

Call the LLM

Store prompt + response + embedding
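The steps above can be sketched in a few lines of Python. This is a minimal version under stated assumptions: `embed` and `call_llm` are hypothetical callables you would supply (an embedding model and an LLM client), and the cache does a brute-force linear scan, which is fine for a demo but is exactly what FAISS or Pinecone would replace at scale.

```python
import math
from typing import Callable, Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Brute-force semantic cache; a vector index would replace the linear scan at scale."""

    def __init__(self, threshold: float = 0.90) -> None:
        self.threshold = threshold
        # Each entry is (embedding, prompt, response).
        self.entries: list[tuple[list[float], str, str]] = []

    def lookup(self, embedding: list[float]) -> Optional[str]:
        """Return the most similar cached response if it clears the threshold."""
        best_score, best_response = -1.0, None
        for cached_embedding, _prompt, response in self.entries:
            score = cosine_similarity(embedding, cached_embedding)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def store(self, embedding: list[float], prompt: str, response: str) -> None:
        self.entries.append((embedding, prompt, response))


def answer(
    prompt: str,
    embed: Callable[[str], list[float]],  # hypothetical embedding function
    call_llm: Callable[[str], str],       # hypothetical LLM client
    cache: SemanticCache,
) -> str:
    embedding = embed(prompt)             # convert the prompt to a vector
    cached = cache.lookup(embedding)      # search and compare against the threshold
    if cached is not None:
        return cached                     # reuse the cached response
    response = call_llm(prompt)           # otherwise call the LLM...
    cache.store(embedding, prompt, response)  # ...and store prompt + response + embedding
    return response
```

The 0.90 threshold is a trade-off: raising it reduces the risk of returning an answer to a subtly different question, at the cost of fewer cache hits.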

Response caching (caching the output of the LLM call): This is the one I always implement when working with AI agents. If the same prompt hits your system again, you don't call the LLM at all; you simply return the cached response. Redis is a common choice for implementing response caching.

How it works

User sends a prompt

System hashes or fingerprints the prompt

Check cache:

✅ Cache hit → return stored response instantly

❌ Cache miss → call LLM, store response, return it
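A minimal sketch of this flow, assuming a plain in-memory dictionary with a TTL as a stand-in for Redis (with the redis-py client, the equivalent calls would be `get(key)` and `set(key, value, ex=ttl_seconds)`). `call_llm` is a hypothetical placeholder for your model client. Including the model name in the fingerprint is a design choice, so that switching models doesn't serve answers produced by a different model.

```python
import hashlib
import time
from typing import Callable, Optional


def fingerprint(prompt: str, model: str) -> str:
    """Hash the model name and prompt into a fixed-length cache key."""
    return hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()


class ResponseCache:
    """In-memory stand-in for Redis: key -> (expiry timestamp, response)."""

    def __init__(self, ttl_seconds: float = 3600) -> None:
        self.ttl = ttl_seconds
        self._store: dict[str, tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        item = self._store.get(key)
        if item is None:
            return None
        expires_at, response = item
        if time.monotonic() >= expires_at:
            del self._store[key]  # an expired entry counts as a miss
            return None
        return response

    def set(self, key: str, response: str) -> None:
        self._store[key] = (time.monotonic() + self.ttl, response)


def cached_completion(
    prompt: str,
    model: str,
    call_llm: Callable[[str], str],  # hypothetical LLM client
    cache: ResponseCache,
) -> str:
    key = fingerprint(prompt, model)
    hit = cache.get(key)
    if hit is not None:
        return hit                   # cache hit: return the stored response
    response = call_llm(prompt)      # cache miss: call the LLM,
    cache.set(key, response)         # store the response,
    return response                  # and return it
```

The TTL keeps answers from going stale when the underlying facts (such as opening hours) change.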

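LLM providers also offer a related feature, usually called prompt caching. Because many prompts share repeated content (such as a long system prompt), the provider can reuse tokens it has already processed instead of recomputing them, and some providers bill these cached tokens at a discount. Note that this caches the processed prompt, not the final response, so the model is still called.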

Image generated using ChatGPT

Layer 2: ML Router

Cost: ~$0.001 · Latency: ~100 ms

This layer handles problems that can be framed as classification, such as intent detection, entity extraction, and semantic detection. If the model's confidence in its prediction is above a threshold (e.g., 0.90), we can answer directly without any LLM call; if confidence is lower, the query is escalated to an LLM.

It works best for returns, order status, account actions, and other structured workflows.
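The confidence gate can be expressed as a small function. This sketch assumes the classifier exposes per-intent probabilities (for example, what a scikit-learn classifier's `predict_proba` returns, mapped to intent names); the intent names and canned responses in `INTENT_HANDLERS` are hypothetical.

```python
from typing import Optional

CONFIDENCE_THRESHOLD = 0.90

# Hypothetical intents this layer is allowed to answer without an LLM.
INTENT_HANDLERS = {
    "order_status": "Sure, please share your order number and I'll look it up.",
    "return_request": "I can help with that. Which item would you like to return?",
}


def ml_route(
    intent_probs: dict[str, float], threshold: float = CONFIDENCE_THRESHOLD
) -> Optional[str]:
    """Answer directly when the top intent is confident and supported; else return None."""
    if not intent_probs:
        return None
    intent, confidence = max(intent_probs.items(), key=lambda kv: kv[1])
    if confidence >= threshold and intent in INTENT_HANDLERS:
        return INTENT_HANDLERS[intent]
    return None  # low confidence or unsupported intent: hand off to an LLM layer
```

For example, `{"order_status": 0.95, "other": 0.05}` is answered directly, while `{"order_status": 0.62, "return_request": 0.38}` returns `None` and escalates. The threshold acts on per-query confidence; the accuracy of the classifier itself still needs to be measured offline on labeled data.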

Layer 3: Small LLM

Cost: ~$0.002 · Latency: ~500 ms

At the start of any project, it is essential to create a representative sample set (a golden dataset) and validate model outputs using both a small LLM and a large LLM. This comparative evaluation helps determine whether the smaller model can meet quality requirements.

If the responses from the small LLM are comparable in accuracy and usefulness, it should be preferred over the larger model. Cost estimation should always be performed across the full dataset before finalizing the model choice.
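One way to run this comparison, sketched under assumptions: `generate` is a hypothetical wrapper around a model that returns the answer plus its cost in USD, `score` is whatever quality metric you choose (exact match, a rubric, or an LLM judge), and the 0.02 `tolerance` is an illustrative number, not a recommendation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalResult:
    model: str
    mean_score: float   # average quality score over the golden dataset (0..1)
    total_cost: float   # total USD spent answering the golden dataset


def evaluate(
    model: str,
    generate: Callable[[str], tuple[str, float]],  # hypothetical: question -> (answer, cost_usd)
    golden: list[tuple[str, str]],                 # (question, reference answer) pairs
    score: Callable[[str, str], float],            # hypothetical: (answer, reference) -> 0..1
) -> EvalResult:
    if not golden:
        raise ValueError("golden dataset is empty")
    scores, cost = [], 0.0
    for question, reference in golden:
        answer, call_cost = generate(question)
        scores.append(score(answer, reference))
        cost += call_cost
    return EvalResult(model, sum(scores) / len(scores), cost)


def pick_model(small: EvalResult, large: EvalResult, tolerance: float = 0.02) -> str:
    """Prefer the small model when its quality is within `tolerance` of the large one."""
    if small.mean_score >= large.mean_score - tolerance:
        return small.model
    return large.model
```

Comparing `total_cost` for the two results over the full golden dataset gives the cost estimate this section recommends before committing to a model.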

In one of my projects, using GPT-4o Mini resulted in a cost that was nearly 10× lower than GPT-4o, while producing answers of comparable quality.

Small LLMs typically perform well for tasks such as simple explanations, light comparisons, and straightforward content generation. As a best practice, a small LLM should be the default choice, with larger models reserved only for cases that require deeper reasoning or complex synthesis.

Layer 4: Large LLM

Cost: ~$0.02 · Latency: 2–3 seconds

What it does

Large LLMs are designed for tasks that require deeper cognitive capabilities, including:

Multi-step and complex reasoning

Personalized responses that incorporate user context and preferences

Synthesis across multiple documents, tools, or knowledge sources

Retrieval-Augmented Generation (RAG) with long-context inputs

Reality check

Per token, large LLMs can cost roughly 17× more than small LLMs (compare GPT-4o and GPT-4o mini list prices)

They should be used sparingly and intentionally, only when the task genuinely requires advanced reasoning or cross-context synthesis

As a best practice, large LLMs should not be the default choice. Instead, they should be positioned as an escalation layer, invoked only after simpler and more cost-efficient options have been evaluated and ruled out.
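To make the escalation rule explicit, here is a hypothetical policy that defaults to the small model and escalates only when one of the large-LLM criteria listed above applies. The `QueryProfile` fields and the 8,000-token long-context cutoff are assumptions for illustration; in practice these signals might come from the ML router or from simple heuristics.

```python
from dataclasses import dataclass

LONG_CONTEXT_TOKENS = 8_000  # assumed cutoff for "long-context" RAG inputs


@dataclass
class QueryProfile:
    # Hypothetical features describing what a query needs.
    multi_step: bool = False         # multi-step or complex reasoning
    uses_user_context: bool = False  # personalized answer using user context/preferences
    num_sources: int = 1             # documents, tools, or knowledge sources to synthesize
    context_tokens: int = 0          # size of retrieved context for RAG


def choose_llm(profile: QueryProfile) -> str:
    """Default to the small model; escalate only when a large-LLM criterion applies."""
    needs_large = (
        profile.multi_step
        or profile.uses_user_context
        or profile.num_sources > 1
        or profile.context_tokens > LONG_CONTEXT_TOKENS
    )
    return "large" if needs_large else "small"
```

Keeping the policy as a single explicit function makes the escalation criteria easy to review and to tune against the traffic-share targets.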

Routing Decision Tree (This Should Be in Your Docs)

Now, let’s look at the key metrics to track for each layer:

| Metric | What It Measures | Why It Matters | Good Target Range | Layer |
|---|---|---|---|---|
| Cost per Query | Average end-to-end cost for handling one user query | Directly impacts unit economics and scalability | <$0.005 avg | All layers |
| Cache Hit Rate | % of queries served from the response/semantic cache | Biggest lever for cost and latency reduction | 40–60% | Layer 1 (Cache) |
| ML Intent Accuracy | Confidence-weighted correctness of intent classification | Determines whether queries avoid LLMs entirely | ≥90% | Layer 2 (ML Router) |
| Small LLM Traffic Share | % of queries handled by the small LLM | Indicates efficient model utilization | 30–50% | Layer 3 (Small LLM) |
| Large LLM Traffic Share | % of queries escalated to the large LLM | Signals how often deep reasoning is required | <10–15% | Layer 4 (Large LLM) |
| Cache Read Latency | Time to retrieve a cached response | Determines perceived “instant” responses | <50 ms | Layer 1 (Cache) |
| Fallback Rate | % of queries that bypass routing and go straight to the large LLM | Indicates routing failures or misclassification | <5% | Router + LLM layers |
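Most of these metrics fall out of a simple routing log. The sketch below assumes each handled query is recorded with the layer that answered it, its cost, and whether it bypassed routing; the record format is hypothetical. The example log plugs in this article's per-layer cost estimates and an illustrative traffic mix (50% cache, 10% ML router, 30% small LLM, 10% large LLM).

```python
from dataclasses import dataclass


@dataclass
class RoutedQuery:
    layer: str              # "cache", "ml_router", "small_llm", or "large_llm"
    cost_usd: float         # end-to-end cost of handling this query
    fallback: bool = False  # True if it bypassed routing and went straight to the large LLM


def routing_metrics(log: list[RoutedQuery]) -> dict[str, float]:
    """Compute cost and traffic-share metrics over a routing log."""
    n = len(log)
    if n == 0:
        raise ValueError("routing log is empty")

    def share(layer: str) -> float:
        return sum(q.layer == layer for q in log) / n

    return {
        "cost_per_query": sum(q.cost_usd for q in log) / n,
        "cache_hit_rate": share("cache"),
        "small_llm_share": share("small_llm"),
        "large_llm_share": share("large_llm"),
        "fallback_rate": sum(q.fallback for q in log) / n,
    }


# Illustrative traffic mix using this article's per-layer cost estimates.
example_log = (
    [RoutedQuery("cache", 0.0)] * 50
    + [RoutedQuery("ml_router", 0.001)] * 10
    + [RoutedQuery("small_llm", 0.002)] * 30
    + [RoutedQuery("large_llm", 0.02)] * 10
)
metrics = routing_metrics(example_log)
```

With this mix, the blended cost works out to about $0.0027 per query, under the <$0.005 target, even though the large LLM handles only 10% of traffic and accounts for most of the spend.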

The transition from a single LLM-dependent system to a 4-layer chatbot stack is not just a technical upgrade; it is a financial necessity for any team scaling AI. By treating your chatbot as a series of filters, you ensure that your architecture follows the core principle of efficiency engineering: "Most traffic should die early and cheaply".

As you implement this stack, keep the following "Golden Rule" in mind: Use the smallest capability that works. A system that routes a simple "What are your hours?" query to a large LLM is failing at a routing level, not a prompting level. Your goal should be to maximize your Cache Hit Rate (targeting 40–60%) and utilize your ML Router to handle structured workflows like order status or account actions.

By the time a query reaches your large LLM layer, it should be because the task genuinely requires multi-step reasoning, personalized context, or complex synthesis. If you can keep your Large LLM traffic share below 10–15%, you have successfully built a system that is both intelligent and economically sustainable. In the world of AI development, the smartest routing isn't about having the fanciest model—it’s about matching the right tool to the right task.