In this article, we’ll explore Retrieval-Augmented Generation (RAG), one of the most widely adopted AI techniques in applications today. From customer support assistants to enterprise knowledge systems, many real-world AI products are built on top of RAG.

Because of its widespread adoption, understanding how RAG works is no longer optional—especially for product managers, AI practitioners, and technology leaders involved in building or evaluating AI-powered systems.

This article is designed to give you a clear, end-to-end understanding of RAG, starting from the basic RAG workflow and gradually progressing to advanced RAG architectures used in production systems. By the end, you’ll understand not just what RAG is, but why different RAG techniques exist and when to use them.

Why RAG Exists (The Problem It Solves)

Large Language Models (LLMs) like GPT are powerful, but they have three fundamental limitations:

They don’t know your private data (internal docs, PDFs, databases).

Their knowledge is frozen at training time.

They can hallucinate, producing confident-sounding answers even when they lack the underlying knowledge.

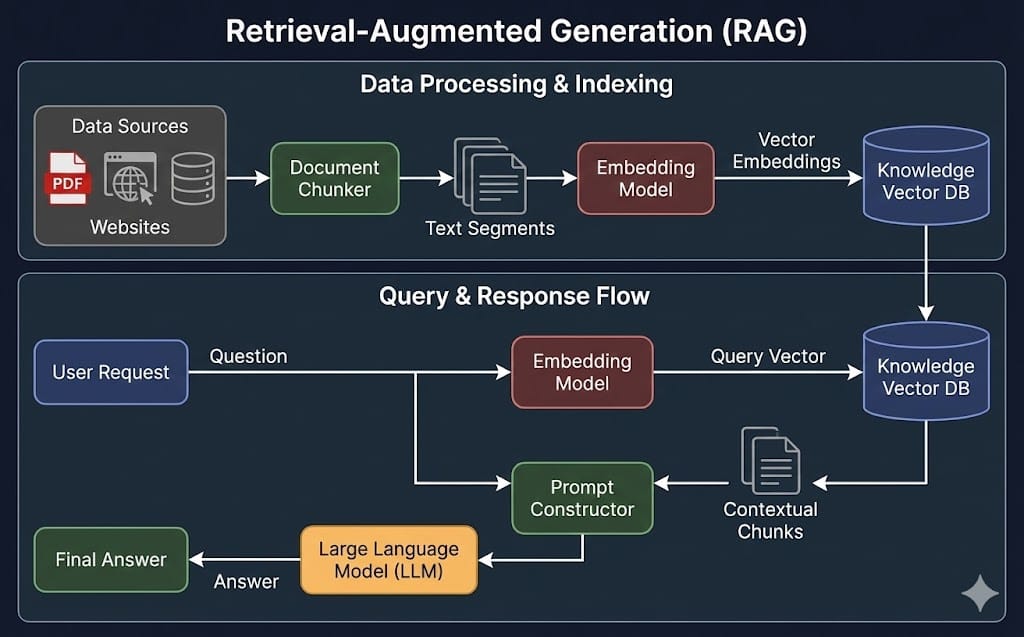

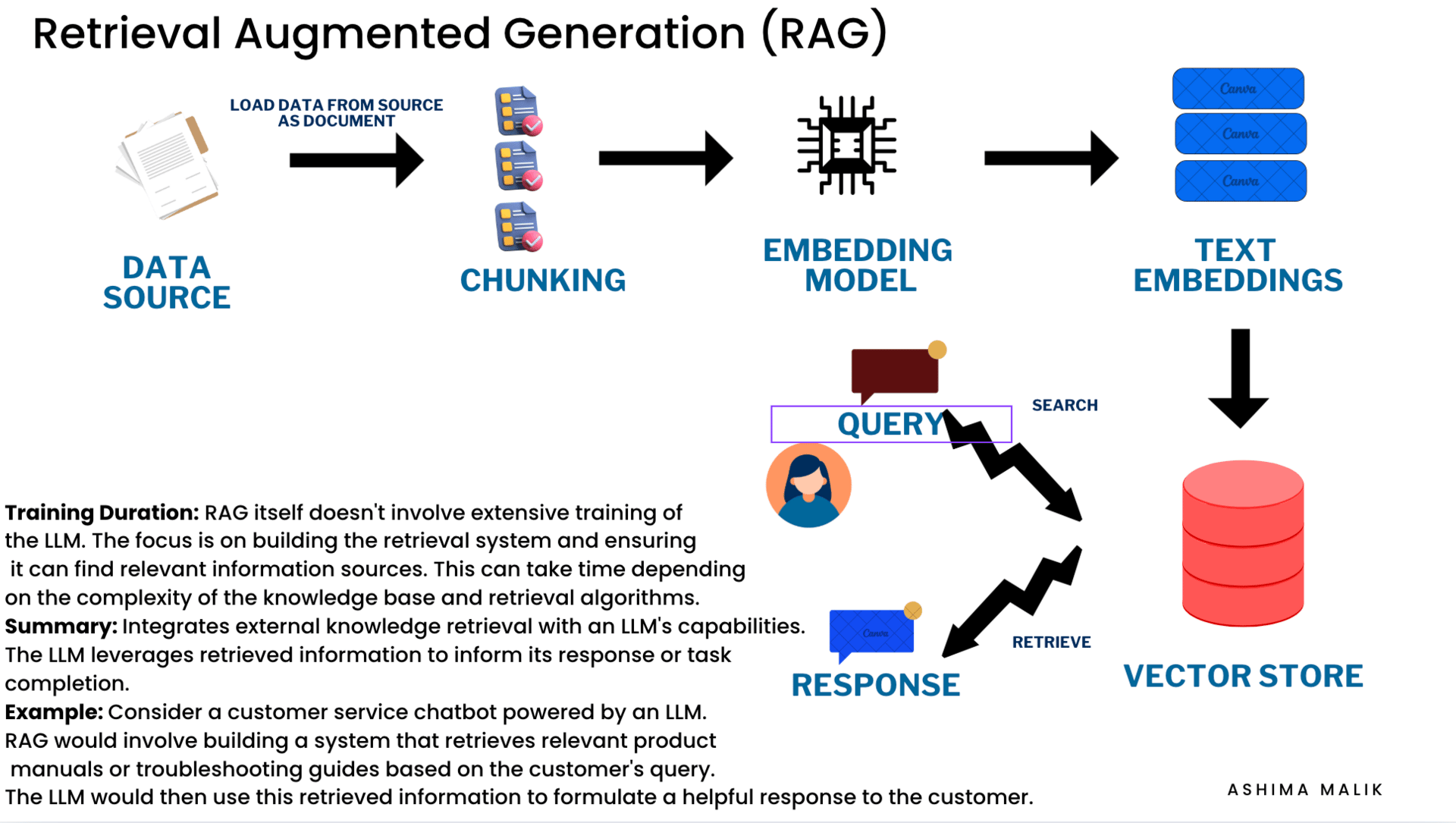

Retrieval-Augmented Generation (RAG) solves this by combining search + generation:

Instead of asking the LLM to answer from memory, we first retrieve relevant knowledge and then ask the LLM to generate an answer grounded in that knowledge.

Before diving into advanced RAG architectures, it’s important to understand the core building blocks that power every Retrieval-Augmented Generation system.

1. Knowledge Source

What it means:

The place where your system’s information lives.

Examples:

PDFs (policies, manuals, research papers)

Docs & Wikis (Notion, Confluence)

Support tickets

Databases

APIs

Two types:

Structured data: Tables, SQL rows, schemas (easy to query)

Unstructured data: Text documents, emails, PDFs (harder for machines)

📌 Why this matters:

RAG allows LLMs to answer questions using your own data, not just what they learned during training.

2. Chunking

What it means:

Breaking large documents into small, manageable pieces called chunks.

Why chunking is needed:

LLMs cannot read very large documents at once

Smaller chunks improve search accuracy

Typical chunk size:

300–1,000 tokens per chunk

Think of it this way: instead of searching an entire book, you search only the relevant paragraphs.
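As a rough illustration of chunking, here is a minimal sketch that splits text into fixed-size pieces. It makes two assumptions that are not part of the description above: it counts whitespace-separated words as a stand-in for tokens (a real pipeline would use the model's tokenizer), and it overlaps neighbouring chunks so that a sentence cut at a boundary still appears whole in one of them. The function name `chunk_words` and its parameters are hypothetical.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Neighbouring chunks share `overlap` words (an assumption of this sketch).
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


# A synthetic 500-word document, used only to show the chunk boundaries.
document = " ".join(f"word{i}" for i in range(500))
chunks = chunk_words(document, chunk_size=200, overlap=40)
print(len(chunks), [len(c.split()) for c in chunks])
```

Larger chunks keep more context per piece but make retrieval less precise, so the chunk size and overlap are usually tuned per dataset.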

3. Embeddings

What it means:

Embeddings convert text into numbers that represent meaning.

Text → Vector (list of numbers)

Similar meanings → similar vectors

Why embeddings matter:

They enable semantic search rather than keyword search: the system retrieves results based on the meaning and intent of a query, not just exact keyword matches.

Examples of common embedding models:

OpenAI embeddings

Sentence Transformers

4. Vector Databases (FAISS, Chroma, Pinecone, etc.)

What they do:

Store embeddings and allow fast similarity search.

Common Vector Databases

FAISS

Open-source library by Meta

Runs locally

Great for experimentation

Chroma

Developer-friendly, lightweight

Popular for RAG prototypes

Pinecone

Fully managed, cloud-based

Scales well for production systems

Weaviate

Vector DB with built-in schemas and filtering

📌 Vector databases let you quickly find text that means something similar, not just exact word matches.

5. Retriever

What it means:

The component responsible for finding relevant chunks from your data.

How it works:

User asks a question

Question is converted into an embedding

Retriever searches the vector database

Returns top-k most relevant chunks

Common retrieval methods:

Cosine similarity: measures how similar two texts are by comparing the angle between their embedding vectors (the numeric representations of the texts). The smaller the angle, the closer the meaning.

Approximate Nearest Neighbor (ANN): a fast search technique that finds vectors close enough to the query vector without comparing it against every stored vector.

The retriever decides what information the LLM sees—making it one of the most critical quality levers in RAG.
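The retrieval steps above can be sketched with a brute-force cosine similarity search. This is only an illustration: the three-dimensional vectors and chunk names are made up, and the search compares the query against every stored vector, which is exactly what ANN indexes avoid at scale.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def retrieve_top_k(query_vec, index, k=2):
    """Return the k (chunk_id, score) pairs most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), chunk_id)
              for chunk_id, vec in index.items()]
    scored.sort(reverse=True)
    return [(chunk_id, score) for score, chunk_id in scored[:k]]


# Hypothetical embeddings; real ones have hundreds or thousands of dimensions.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "privacy-notice": [0.0, 0.2, 0.9],
}
query_vec = [0.8, 0.2, 0.1]
top = retrieve_top_k(query_vec, index, k=2)
print(top)
```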

6. Context Window

What it means:

The maximum amount of text an LLM can read in one request.

Why it matters:

Retrieved chunks must fit inside this limit

Too much text → truncation

Too little text → missing information

It’s like the size of a whiteboard the LLM can look at before answering.
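One simple way to respect the context window is to add chunks in rank order until a token budget is reached. The sketch below assumes a crude estimate of about four characters per token, which is only a rule of thumb for English text; a production system would count tokens with the model's own tokenizer. The names `estimate_tokens` and `pack_context` are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Very rough token estimate (assumption: ~4 characters per token)."""
    return max(1, len(text) // 4)


def pack_context(ranked_chunks: list[str], budget_tokens: int):
    """Keep chunks in rank order and stop before the budget is exceeded."""
    selected, used = [], 0
    for chunk in ranked_chunks:
        cost = estimate_tokens(chunk)
        if used + cost > budget_tokens:
            break  # the next chunk would overflow the window
        selected.append(chunk)
        used += cost
    return selected, used


# Three placeholder chunks of 400 characters each (about 100 tokens apiece).
ranked_chunks = ["a" * 400, "b" * 400, "c" * 400]
selected, used = pack_context(ranked_chunks, budget_tokens=250)
print(len(selected), used)
```

Stopping at the first chunk that does not fit keeps the highest-ranked material; the budget should also leave room for the question and the model's answer.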

7. Grounded Generation

What it means:

The LLM generates answers based on retrieved documents, not imagination.

Why this is critical:

Reduces hallucinations

Improves factual accuracy

Enables citations and traceability

📌 Key difference:

❌ Ungrounded LLM → guesses

✅ RAG-powered LLM → answers with evidence
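Grounding is largely a matter of how the prompt is assembled. The following sketch shows one possible prompt template that numbers each retrieved chunk so the model can cite it; the wording of the instructions and the `source`/`text` field names are assumptions for illustration, not a prescribed format.

```python
def build_grounded_prompt(question: str, chunks: list[dict]) -> str:
    """Assemble a prompt that asks the model to answer only from the sources."""
    sources = "\n".join(
        f"[{i}] ({chunk['source']}) {chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by number, like [1]. "
        "If the sources do not contain the answer, say you do not know.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical retrieved chunks.
chunks = [
    {"source": "refund.pdf", "text": "Refunds are issued within 14 days."},
    {"source": "faq.md", "text": "Refunds go to the original payment method."},
]
prompt = build_grounded_prompt("How long do refunds take?", chunks)
print(prompt)
```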

Now that you understand the core RAG terminology, let’s look at how simple RAG works.

A. Simple / Naive RAG (Baseline)

Steps:

User asks a question

Convert question → embedding

Retrieve top-k similar chunks

Pass chunks + question to LLM

LLM generates answer
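The five steps can be wired together in a few lines. The sketch below is deliberately a toy: `embed` is a bag-of-words counter standing in for a real embedding model (so it matches shared words, not meaning), and `fake_llm` is a placeholder for a real model call. Both names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def fake_llm(prompt: str) -> str:
    """Placeholder for a real LLM call; it only reports the prompt size."""
    return f"(model answer based on a {len(prompt)}-character prompt)"


def naive_rag(question: str, chunks: list[str], k: int = 2, llm=fake_llm):
    q_vec = embed(question)                                   # step 2
    ranked = sorted(chunks, key=lambda c: cosine(q_vec, embed(c)),
                    reverse=True)                             # step 3
    context = "\n".join(ranked[:k])
    prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"  # step 4
    return llm(prompt), ranked[:k]                            # step 5


chunks = [
    "Our office is closed on public holidays.",
    "Shipping takes 3 to 5 business days.",
    "Refunds are issued within 14 days of purchase.",
]
answer, top_chunks = naive_rag("How many days do refunds take?", chunks)
print(top_chunks)
print(answer)
```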

Why It’s Called “Naive”

No query rewriting

No ranking refinement

No context awareness

When to Use

MVPs

Internal tools

Small knowledge bases

B. Basic Unstructured RAG

A RAG system built only on unstructured data (PDFs, docs, text files). Its key characteristics: no schema, no relationships, pure semantic search.

This type of RAG struggles with multi-step questions, large documents, and context that spans multiple sections.

C. Parent Document Retriever

While chunking large documents into smaller pieces improves retrieval accuracy, these small chunks often lose the broader context, leading to incomplete or misleading answers.

How it works:

Documents are split into small child chunks for precise retrieval.

Each child chunk is linked to a larger parent document.

During retrieval, the system first finds the most relevant child chunks.

Instead of sending only those small chunks to the LLM, it retrieves and sends the associated parent document, providing richer context.

This approach ensures the LLM receives enough surrounding information to generate more coherent, accurate, and context-aware responses.

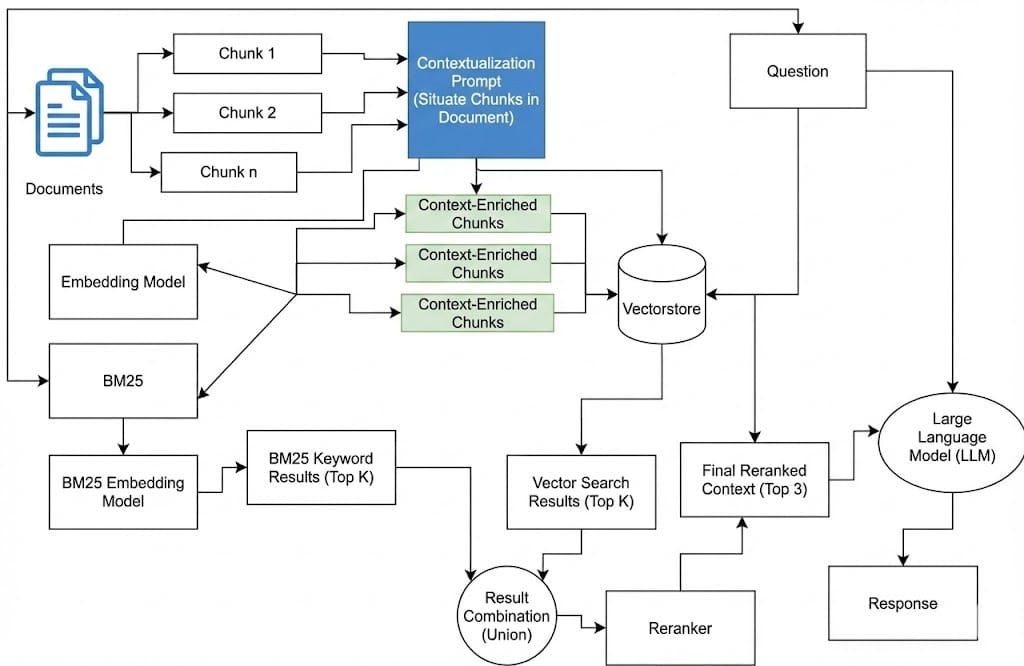
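The child-to-parent lookup can be sketched as follows. The scoring function here is a toy word-overlap count standing in for vector similarity, and the documents, chunk size, and function names are all made up for illustration.

```python
def split_into_children(parent_id: str, text: str, size: int = 8) -> list[dict]:
    """Split a parent document into small child chunks that remember their parent."""
    words = text.split()
    return [{"parent_id": parent_id, "text": " ".join(words[i:i + size])}
            for i in range(0, len(words), size)]


def score(query: str, text: str) -> int:
    """Toy relevance score: number of shared lowercase words."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve_parents(query: str, children: list[dict], parents: dict, k: int = 2):
    """Search over child chunks, then return the linked parent documents."""
    ranked = sorted(children, key=lambda c: score(query, c["text"]), reverse=True)
    parent_ids = []
    for child in ranked[:k]:
        if child["parent_id"] not in parent_ids:  # de-duplicate, keep rank order
            parent_ids.append(child["parent_id"])
    return [parents[pid] for pid in parent_ids]


parents = {
    "leave": ("Employees accrue paid leave monthly. Unused leave carries over "
              "for one year. Requests need manager approval two weeks ahead."),
    "expenses": ("Expense reports are due by the fifth of each month. Receipts "
                 "are required for any claim above twenty dollars."),
}
children = [child for pid, text in parents.items()
            for child in split_into_children(pid, text)]
result = retrieve_parents("unused leave carries over", children, parents)
print(result)
```

In this example both top child chunks belong to the same parent, so the LLM receives that one full document rather than two fragments.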

D. Contextual RAG

A single question can mean different things depending on who is asking and in what situation. Basic RAG retrieves documents purely based on the query text, while Contextual RAG retrieves information that matches the user’s intent and context.

What “Context” Can Include

User role (product manager, engineer, customer)

Conversation history

Previous queries or actions

Product state or workspace

Organizational or access-level constraints

Contextual RAG Workflow

User Query – The user asks a question.

Context Collection – The system gathers relevant context (user role, session history, metadata).

Context-Enriched Query – The original query is augmented or filtered using this context.

Context-Aware Retrieval – The retriever searches the knowledge base using the enriched query.

Relevant Context Selection – The most relevant documents or chunks are selected.

Grounded Generation – The LLM generates a response using both the retrieved documents and user context.
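A minimal sketch of the workflow above, assuming two kinds of context: the user's role (used as an access filter) and recent queries (appended to the query). The `UserContext` class, the `roles` field on each chunk, and the word-overlap ranking are hypothetical simplifications.

```python
from dataclasses import dataclass, field


@dataclass
class UserContext:
    role: str
    recent_queries: list[str] = field(default_factory=list)


def enrich_query(query: str, ctx: UserContext) -> str:
    """Append the last two queries from the session to the current one."""
    history = " ".join(ctx.recent_queries[-2:])
    return f"{query} {history}".strip()


def contextual_retrieve(query: str, ctx: UserContext, chunks: list[dict], k: int = 2):
    # Access-level filter: only chunks this role is allowed to see.
    allowed = [c for c in chunks if ctx.role in c["roles"]]
    terms = set(enrich_query(query, ctx).lower().split())
    # Toy ranking by word overlap, standing in for vector similarity.
    ranked = sorted(allowed,
                    key=lambda c: len(terms & set(c["text"].lower().split())),
                    reverse=True)
    return ranked[:k]


chunks = [
    {"text": "pricing roadmap for the enterprise tier", "roles": {"product_manager"}},
    {"text": "pricing api rate limits and error codes", "roles": {"engineer"}},
    {"text": "public pricing page overview",
     "roles": {"product_manager", "engineer", "customer"}},
]
engineer = UserContext(role="engineer", recent_queries=["api rate limits"])
eng_results = contextual_retrieve("pricing", engineer, chunks)
pm_results = contextual_retrieve("pricing", UserContext(role="product_manager"), chunks)
print([c["text"] for c in eng_results])
print([c["text"] for c in pm_results])
```

The same one-word query returns different chunks for the engineer and the product manager, which is the behaviour the workflow describes.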

Why It’s Valuable

Improves relevance and precision

Reduces generic or misleading answers

Enables personalized and role-aware responses

Enhances user experience in SaaS and enterprise products

E. RAG Fusion

RAG Fusion is an advanced Retrieval-Augmented Generation (RAG) technique that goes beyond simple single-query retrieval. Instead of retrieving information only once from a knowledge base, RAG Fusion:

✅ Generates multiple queries from the original user input

✅ Performs retrieval for each query

✅ Uses a fusion/ranking algorithm to combine and reorder results

✅ Provides the LLM with a richer, more relevant context before generating an answer

How RAG Fusion Works

User Query

↓

LLM Sub-Query Generator

↓

┌────────────────────────────────────────┐

│ Generated Sub-Queries (Q1, Q2, Q3...) │

└────────────────────────────────────────┘

↓

Vector Search for Each Sub-Query

↓

Multiple Retrieved Lists

↓

Reciprocal Rank Fusion (RRF)

↓

Fused & Re-Ranked Top Documents

↓

LLM + Unified Context

↓

Final Answer

User Query Received – The system receives a natural language question from the user.

Generate Sub-Queries – An LLM takes the original query and creates several related sub-queries that capture different semantic perspectives (e.g., definitions, subtopics, related concepts).

Embed All Queries – Each query and sub-query is converted into embedding vectors (semantic representations).

Multiple Retrievals – Each embedding is used to retrieve relevant chunks from the vector database independently.

Rank & Fuse Results – A fusion algorithm such as Reciprocal Rank Fusion (RRF) combines the retrieved document lists from each sub-query and re-ranks them based on consensus relevance across queries.

Build Unified Context – The top-ranked chunks (after fusion) are assembled into a comprehensive context.

Grounded Generation – The LLM generates the final answer using the fused context, reducing hallucinations and improving accuracy.

Note: Reciprocal Rank Fusion (RRF) gives higher weight to documents that are near the top across multiple retrieval passes — effectively prioritizing consistently relevant sources.

Now, let’s look at RRF in a little more detail.

Suppose the user asks: “How does AI agent memory work?” The system generates two sub-queries:

Query 1: “AI agent memory mechanisms”

Query 2: “types of memory in AI agents”

Each query retrieves its own ranked list of documents. For example:

Results from Query 1

Doc A

Doc B

Doc C

Results from Query 2

Doc B

Doc A

Doc D

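RRF assigns each document a score by summing 1 / (k + rank) over every list in which it appears, where k is a smoothing constant (60 is a common choice). As a result, higher-ranked documents get higher scores, and documents that appear in multiple lists get boosted.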

| Document | Rank in Q1 | Rank in Q2 | Why It Scores Well |
|---|---|---|---|
| Doc A | 1 | 2 | Appears near top in both |
| Doc B | 2 | 1 | Appears near top in both |
| Doc C | 3 | – | Appears only once |
| Doc D | – | 3 | Appears only once |

Final Fused Ranking (After RRF)

Doc A and Doc B (tied: each is ranked 1st in one list and 2nd in the other, so their RRF scores are identical)

Doc C and Doc D (tied: each appears in only one list, at rank 3)
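To make the scoring concrete, here is a small sketch of Reciprocal Rank Fusion applied to the two result lists above. It uses the usual formula, score(d) = sum of 1 / (k + rank) over the lists that contain d, with k = 60 as a commonly used default. Under this formula Doc A and Doc B receive exactly the same score, so any order between them is a tie-break rather than a difference in relevance.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> dict:
    """Sum 1 / (k + rank) for each document across all ranked lists."""
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc in enumerate(ranking, start=1):  # ranks start at 1
            scores[doc] += 1.0 / (k + rank)
    return dict(scores)


q1_results = ["Doc A", "Doc B", "Doc C"]
q2_results = ["Doc B", "Doc A", "Doc D"]

scores = reciprocal_rank_fusion([q1_results, q2_results])
fused = sorted(scores, key=scores.get, reverse=True)

for doc in fused:
    print(doc, round(scores[doc], 5))
```

Because the constant k is large relative to the ranks, a document that appears in two lists comfortably outscores one that appears in a single list, even at a better rank.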

Why RAG Fusion Matters

Standard RAG assumes one retrieval pass is enough — but real world questions are often ambiguous or multifaceted. RAG Fusion overcomes this limitation by:

Expanding search coverage: Multiple queries explore different angles of the question.

Improving relevance: Combining results from several retrievals surfaces documents that are consistently relevant across queries.

Reducing bias: It avoids over-reliance on a single retrieval outcome.

Enhancing factual richness: More diverse retrieval results lead to deeper context and more accurate answers.

F. Hybrid RAG

Hybrid RAG is a retrieval strategy that combines multiple search methods — usually keyword search (traditional) and semantic search (vector/embeddings) — in a unified way before passing retrieved context to the LLM for generation.

Traditional RAG relies mainly on semantic similarity via embeddings. But each retrieval method has strengths and weaknesses:

Keyword search (e.g., BM25):

Finds documents that contain the same terms as the query, which makes it excellent for exact matches and domain-specific terminology.

Semantic search (vector/embeddings):

Finds documents that mean the same thing as the query, even if they don’t share exact words, which makes it excellent for paraphrased or conceptual matches.

Hybrid RAG combines both to get the best of both worlds, improving recall and precision in retrieval.

Hybrid RAG Workflow

User Query

↓

+---------------------+

| Dual Retrieval |

+---------------------+

↓ ↓

Keyword Search Semantic Search

(BM25) (Embeddings)

↓ ↓

Results Combined (Dedup + Re-rank)

↓

Filter & Select Top Documents

↓

LLM Prompt with Context

↓

Grounded Answer

User Query:

The user asks a question in natural language.

Keyword Search (BM25 or Inverted Index):

Finds documents where query words or phrases appear exactly, which is powerful when domain terminology matters.

Semantic Search (Embeddings + Vector DB):

Finds documents whose meaning aligns with the query even without shared words.

Merge Results:

Combine results from both retrieval methods.

Deduplicate and re-rank based on relevance scores or a hybrid ranking strategy.

Top Context Selection:

Choose the best documents up to the context window limit of the LLM.

Grounded LLM Generation:

Pass the selected context and the user query to the LLM to generate a fact-based response.
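The merge step can be implemented in several ways. One option, sketched below, is to rescale each retriever's scores to the range 0 to 1 and take a weighted sum; another common option is rank-based fusion. The weight `alpha`, the example scores, and the function names are assumptions for illustration only.

```python
def min_max(scores: dict) -> dict:
    """Rescale scores to [0, 1] so keyword and vector scores are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_merge(keyword_scores: dict, vector_scores: dict,
                 alpha: float = 0.5, top_n: int = 3):
    """Weighted sum of normalized scores; alpha is the keyword weight."""
    kw, vec = min_max(keyword_scores), min_max(vector_scores)
    docs = set(kw) | set(vec)  # the union de-duplicates documents
    combined = {d: alpha * kw.get(d, 0.0) + (1 - alpha) * vec.get(d, 0.0)
                for d in docs}
    return sorted(combined.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


# Hypothetical raw scores from each retriever (different scales on purpose).
keyword_scores = {"doc1": 7.2, "doc2": 3.1, "doc3": 0.5}
vector_scores = {"doc2": 0.91, "doc4": 0.88, "doc1": 0.40}

merged = hybrid_merge(keyword_scores, vector_scores)
print(merged)
```

Here doc2 ranks first because both retrievers rate it reasonably well, even though neither keyword search nor semantic search alone would have produced this exact ordering.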

Hybrid RAG pulls from both and merges them so the final context is more complete and representative. Now, let’s look at BM25 in more detail.

BM25 (Best Matching 25) is a traditional keyword-based search algorithm used to rank documents based on how well they match a user’s query.

Unlike semantic search, BM25 does not understand meaning — it ranks documents using:

How often query words appear

How important those words are across the document collection

Document length (to avoid bias toward long documents)

BM25 scores documents using three key ideas:

Term Frequency (TF)

A document is more relevant if it contains the query word more often.

Inverse Document Frequency (IDF)

Rare words are more important than common ones. For example, “refund” is more informative than “policy” when “policy” appears in most documents.

Length Normalization

Term counts are scaled by document length relative to the average, so long documents do not outrank shorter, more focused ones simply by containing more words.
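These three ideas map directly onto the BM25 formula. The sketch below implements a common Okapi BM25 variant from scratch; the parameter values `k1 = 1.5` and `b = 0.75` are typical defaults rather than requirements, tokenization is a plain lowercase split, and the three documents are made up.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75):
    """Score every document against the query with Okapi BM25."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # IDF: rare terms get a larger weight.
            idf = math.log((n_docs - doc_freq[term] + 0.5)
                           / (doc_freq[term] + 0.5) + 1)
            # TF with saturation (k1) and length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = [
    "refund policy for damaged items",
    "privacy policy and cookie policy",
    "shipping policy for international orders",
]
scores = bm25_scores("refund policy", docs)
for doc, s in zip(docs, scores):
    print(f"{s:.3f}  {doc}")
```

Every document contains "policy", so that term contributes little; the document containing the rarer term "refund" scores highest.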

| Aspect | BM25 | Semantic Search |
|---|---|---|
| Match Type | Exact words | Meaning |
| Handles Synonyms | ❌ No | ✅ Yes |
| Understands Context | ❌ No | ✅ Yes |
| Speed | Very fast | Slower |

G. HyDE RAG

HyDE stands for Hypothetical Document Embeddings.

Instead of sending the raw user query directly to the retriever, HyDE first asks the LLM to generate a hypothetical answer (a pseudo-document) and then uses that generated text for retrieval.

This approach boosts retrieval quality without training any new models — it uses the LLM’s own reasoning to craft a richer semantic representation of the user intent.

How HyDE RAG Works

1. User Query (user submits a question)

↓

2. Hypothetical Answer Generation (LLM)

↓

3. Hypothetical Answer → Embeddings

↓

4. Vector DB Search (top-k retrieval)

↓

5. Retrieved Documents

↓

6. Context Assembly (top documents + query)

↓

7. Final LLM Generation (grounded answer)

Why HyDE RAG Works

Standard RAG embeds the user’s question to search the knowledge base. But:

Short queries may lack enough context for precise retrieval

Users often phrase questions vaguely

Complex intent isn’t expressed in a few words

By generating a hypothetical answer, we create a longer, more informative text that exposes concepts the retriever can match more effectively.
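The key change in HyDE is small: the text that gets embedded is the generated pseudo-document rather than the question. The sketch below shows that substitution, with `fake_hypothetical_answer` as a placeholder for the LLM call and a toy bag-of-words `embed` in place of a real embedding model. Both are hypothetical stand-ins.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def fake_hypothetical_answer(question: str) -> str:
    """Placeholder for an LLM call that drafts a plausible (unverified) answer."""
    return ("Agents keep short-term memory in the context window and "
            "long-term memory in a vector store.")


def hyde_retrieve(question: str, chunks: list[str], k: int = 1,
                  generate=fake_hypothetical_answer) -> list[str]:
    hypothetical = generate(question)   # step 2: draft a pseudo-document
    h_vec = embed(hypothetical)         # step 3: embed it, not the question
    return sorted(chunks, key=lambda c: cosine(h_vec, embed(c)),
                  reverse=True)[:k]     # step 4: top-k retrieval


chunks = [
    "Quarterly revenue grew in the enterprise segment.",
    "Long-term memory is persisted in a vector store and recalled by similarity.",
]
result = hyde_retrieve("How does agent memory work?", chunks)
print(result)
```

The hypothetical answer may contain mistakes; it is used only to find real documents, and the final answer is still generated from the retrieved text.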

When to Use HyDE RAG

1. User prompts are short or ambiguous

2. Knowledge base contains dense or complex information

3. Retriever quality needs a boost without retraining embeddings

4. You want better recall without much architectural change

H. Adaptive RAG

Adaptive RAG is a retrieval mechanism that adjusts how many documents are retrieved and used based on the needs of each query, rather than using a fixed number of retrieved chunks for all queries.

Traditional RAG pipelines typically:

Retrieve a fixed number of documents (e.g., top 5 or top 10)

Always concatenate them before generation

But not all queries are equal.

Adaptive RAG dynamically decides:

How many retrieved chunks are really necessary

How much context to feed into the LLM

When to cut off retrieval to avoid noise

This improves both efficiency and answer quality.

How Adaptive RAG Works

1. User Query

↓

2. Initial Retrieval (small top-k)

↓

3. Relevance Evaluation Loop

↓

Need more? → Retrieval expands (e.g., next K docs), then back to step 3

Enough? → Stop

↓

4. Final Context Assembly

↓

5. LLM Grounded Generation

1. User Query

The user asks a question. Example: “How does incremental agent memory update work?”

2. Initial Retrieval

Start with a small number of retrieved chunks (e.g., top 3).

3. Relevance Evaluation Loop

Adaptive logic evaluates:

Are the current retrieved chunks sufficient?

Does the LLM need more context?

Are additional chunks introducing conflicting or redundant info?

This evaluation can use:

A secondary scoring model

A heuristic threshold

LLM signal evaluation (e.g., confidence, overlap)

If not enough context is found, retrieval expands.

This loop continues until:

Sufficient context is reached

or a maximum retrieval cap is hit

4. Final Context Assembly

All selected documents are merged, deduplicated, and ranked.

5. LLM Grounded Generation

The LLM generates the final answer using the assembled context.
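The expand-until-sufficient loop can be sketched as follows. The sufficiency check here is a simple heuristic threshold on summed relevance scores, which is only one of the options listed above; the function names, scores, and thresholds are assumptions for illustration.

```python
def adaptive_retrieve(ranked_chunks, is_sufficient,
                      initial_k: int = 3, step: int = 2, max_k: int = 9):
    """Start with a small top-k and expand until the context is judged sufficient."""
    k = min(initial_k, max_k)
    while True:
        selected = ranked_chunks[:k]
        # Stop when the check passes, the cap is hit, or nothing is left to add.
        if is_sufficient(selected) or k >= max_k or k >= len(ranked_chunks):
            return selected
        k = min(k + step, max_k)


def coverage_heuristic(threshold: float):
    """Hypothetical sufficiency check: total relevance must reach a threshold."""
    def check(selected):
        return sum(score for _, score in selected) >= threshold
    return check


# (chunk, relevance score) pairs, already sorted by the retriever.
relevance = [0.9, 0.7, 0.6, 0.5, 0.4, 0.2, 0.1, 0.1, 0.1, 0.05]
ranked = [(f"chunk{i}", s) for i, s in enumerate(relevance)]

easy = adaptive_retrieve(ranked, coverage_heuristic(2.0))
hard = adaptive_retrieve(ranked, coverage_heuristic(3.0))
capped = adaptive_retrieve(ranked, coverage_heuristic(100.0))
print(len(easy), len(hard), len(capped))
```

The first query stops at the initial three chunks, the second expands once, and the third keeps expanding until it reaches the retrieval cap.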

Why Adaptive RAG Improves Quality

| Issue in Standard RAG | Adaptive RAG Fix |
|---|---|
| Fixed context size works for some queries but not all | Dynamically adapts to query complexity |
| Too many retrieved documents → irrelevant snippets | Limits retrieval when not helpful |
| Too little retrieved context → hallucinations | Expands only when needed |
| Wasted tokens → higher cost | Efficient token usage |

When to Use Adaptive RAG

1. Systems with widely varied queries

2. Cost-sensitive deployments

3. Large knowledge bases where full retrieval is expensive

4. Scenarios where answer quality depends heavily on context depth

I. ReAct RAG

ReAct RAG (Reason + Act with Retrieval) is an agentic RAG approach where the model alternates between reasoning and taking actions (such as retrieval or tool calls) instead of doing retrieval only once at the start.

It combines:

ReAct → Reasoning + Action

RAG → Retrieval-Augmented Generation

This allows the agent to think step-by-step, decide when it needs more information, retrieve it, and then continue reasoning.

ReAct RAG Workflow

User Question

↓

LLM Reasoning Step (Thought)

↓

Decide Action → Retrieve?

↓

Retriever Fetches Documents

↓

Observation (Retrieved Content)

↓

Next Reasoning Step

↓

Repeat (if needed)

↓

Final Answer

User Question: “What are the trade-offs between FAISS and Pinecone for enterprise RAG?”

Step 1: Reason

“I need to compare open-source vs managed vector databases.”

Step 2: Act (Retrieve)

Query knowledge base for:

FAISS features

Pinecone features

Enterprise considerations

Step 3: Observe

Retrieved documents explain scalability, deployment, cost, and ops trade-offs.

Step 4: Reason Again

“I now have enough information to compare.”

Step 5: Generate Answer

Final response is grounded, structured, and complete.
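The reason, act, observe cycle above can be sketched as a loop. In this toy version the "reasoning" is a hard-coded `scripted_policy` standing in for the LLM, and `search` is a keyword lookup standing in for a real retriever; in a real agent the model would produce the thought and choose the action at each step. All names are hypothetical.

```python
def search(query: str, knowledge_base: dict) -> list[str]:
    """Toy retrieval action: return entries whose key appears in the query."""
    return [text for key, text in knowledge_base.items() if key in query.lower()]


def scripted_policy(question: str, observations: list):
    """Stand-in for the LLM's reasoning step: returns (thought, action, argument)."""
    if len(observations) == 0:
        return ("I need FAISS details first.", "retrieve", "faiss features")
    if len(observations) == 1:
        return ("Now I need Pinecone details.", "retrieve", "pinecone features")
    return ("I now have enough information to compare.", "finish", None)


def react_loop(question: str, knowledge_base: dict,
               policy=scripted_policy, max_steps: int = 5):
    observations, trace = [], []
    for _ in range(max_steps):                       # cap the number of steps
        thought, action, arg = policy(question, observations)  # reason
        trace.append((thought, action, arg))
        if action == "finish":
            break
        observations.append(search(arg, knowledge_base))       # act + observe
    return observations, trace


knowledge_base = {
    "faiss": "FAISS is an open-source library that runs locally.",
    "pinecone": "Pinecone is a fully managed, cloud-based service.",
}
question = "What are the trade-offs between FAISS and Pinecone for enterprise RAG?"
observations, trace = react_loop(question, knowledge_base)
for thought, action, arg in trace:
    print(f"Thought: {thought} | Action: {action} | Argument: {arg}")
```

The step cap matters in practice: without it, a model that never decides it has enough information would keep retrieving indefinitely.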

When to Use ReAct RAG

1. Multi-hop reasoning

2. Research assistants

3. Enterprise agents with tools

4. Decision-making workflows

5. Questions where required info isn’t obvious upfront

This article has comprehensively covered the core concepts of Retrieval-Augmented Generation (RAG), along with the most important and advanced RAG methodologies used in modern AI systems.