If AI product sense interviews feel confusing despite learning multiple frameworks, this article is for you. I’ve distilled everything into one simple framework that helps you approach and answer any AI product sense question confidently.

Remember the “Design a Fridge” interview question? It used to be everywhere—but you almost never see it now.

In 2026, top-tier companies — Google, OpenAI, Meta — aren't testing your ability to sketch a feature. They're testing whether you can think like a strategist in an AI-native world.

These are the kinds of real-world questions you’re likely to see in interviews today:

"How would you integrate AI into YouTube to revolutionize shopping?" "How should Google Cloud defend against Microsoft's free AI tier?"

These aren't product design questions. These are business strategy questions dressed as product questions. And most candidates fail these questions because they answer the wrong one.

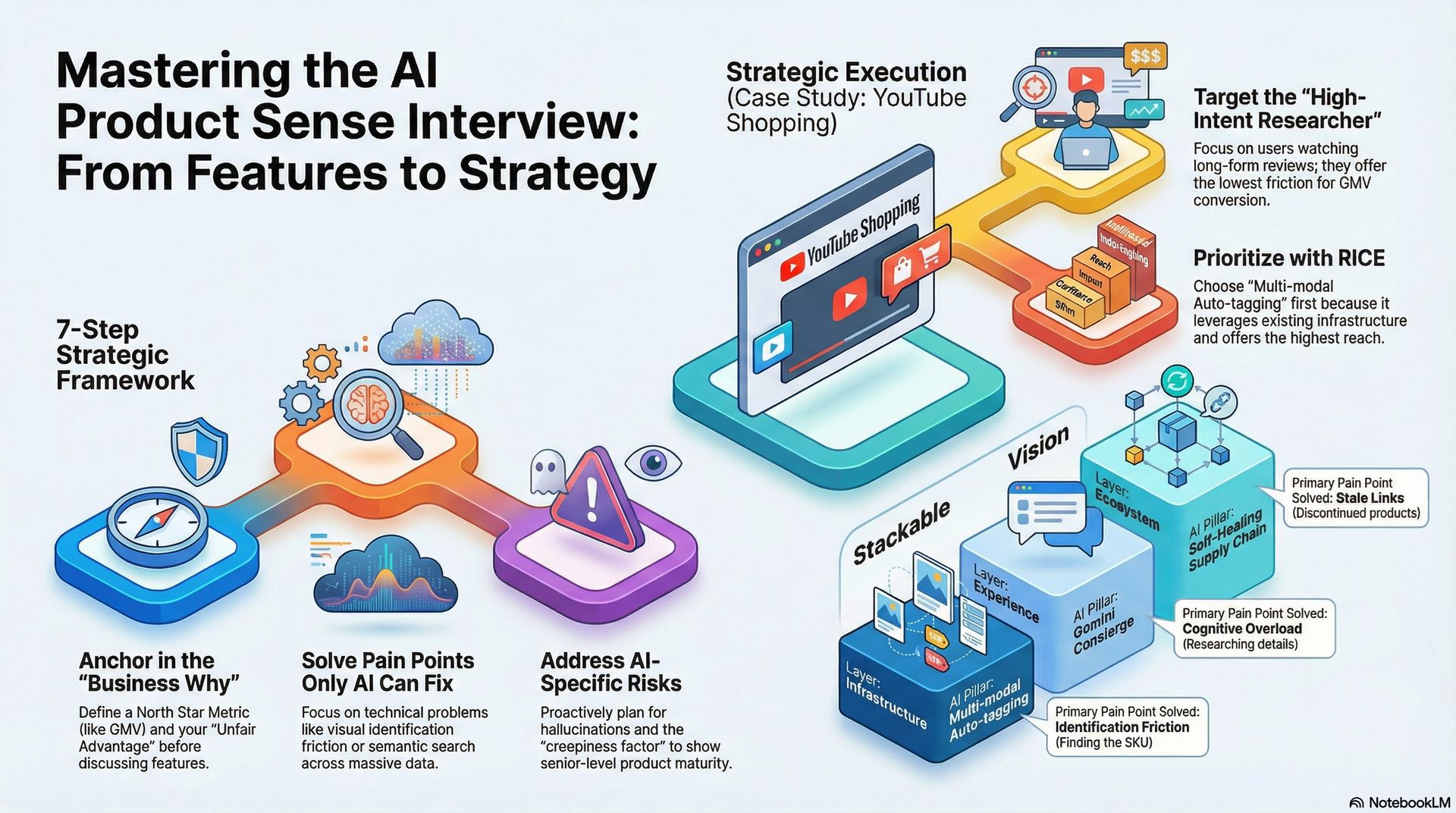

After working through numerous frameworks, I arrived at one simple 7-step framework that can help you answer any product sense question confidently.

The Framework at a Glance

Step 1 → Strategic Objective (Why does this matter to the business?)

Step 2 → User Segmentation (Who exactly are we solving for?)

Step 3 → Pain Points (What problems can only AI fix?)

Step 4 → AI Solution Pillars (What are your 3 bets?)

Step 5 → Prioritization (Which bet wins, and why?)

Step 6 → Risk & Mitigation (What can go wrong with AI specifically?)

Step 7 → Summary & Vision (Tie it back to the mission)

Now, let me walk you through each one using YouTube Shopping as the running example.

The Real Problem YouTube Shopping Is Trying to Solve

Right now, this is what happens on YouTube:

User watches a "Best Laptops 2025" video

↓

Sees a laptop they like at 4:32 in the video

↓

Has to LEAVE YouTube to Google the product name

↓

Goes to Amazon/Best Buy to buy it

↓

YouTube gets $0. Google gets $0.

YouTube is the world's biggest product discovery platform and it captures almost none of the purchase.

That's the business problem. YouTube wants to own the entire journey — from discovery → consideration → purchase — without the user ever leaving.

Step 1 — Start With the Business Why (Not the Feature)

Hot take: Most candidates lose the interview in the first 60 seconds by jumping to solutions.

Before you say anything about AI, anchor the conversation to business reality. Ask 2–3 scoped questions:

"Are we focused on a specific geography or platform?"

"What's the timeline — 3-month MVP or 3-year vision?"

"Is the primary goal revenue growth, engagement, or competitive defense?"

Then define two things out loud:

| What to State | Example (YouTube Shopping) |
|---|---|
| North Star Metric | Grow Gross Merchandise Value (GMV) |
| Unfair Advantage (MOAT) | 800M hours of video data + Google Merchant Center |

The Superpower: Integration with Google Merchant Center (2B+ SKUs) and Gemini’s multi-modal reasoning (the ability to 'watch' and 'understand' video pixels).

The "unfair advantage" framing is intentional. It signals you're not just a PM; you're a strategist who understands moats. Before moving on, here is a quick overview of what Gross Merchandise Value (GMV) actually is.

GMV is the total "cash" value of everything sold on a platform before any expenses are taken out. It is the sum of the price tags of all items sold during a specific period. For example, imagine you run a platform where neighborhood kids sell lemonade:

Kid A sells 10 cups for $2 each.

Kid B sells 5 cups for $4 each.

Total GMV = $40 ($20 + $20).

Even if you (the platform owner) only take a $1 commission from each kid, your GMV is still $40, while your Revenue is only $2.
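The lemonade arithmetic above can be written out as a tiny script. This is only an illustrative sketch: the `Seller` class and the function names are made up for this example, and the flat $1-per-seller commission simply mirrors the example in the text.

```python
from dataclasses import dataclass


@dataclass
class Seller:
    name: str
    cups_sold: int
    price_per_cup: float  # dollars


def gmv(sellers):
    """Sum of price x quantity across all sellers, before any deductions."""
    return sum(s.cups_sold * s.price_per_cup for s in sellers)


def platform_revenue(sellers, commission_per_seller):
    """What the platform keeps: a flat commission taken from each seller."""
    return commission_per_seller * len(sellers)


sellers = [Seller("Kid A", 10, 2.0), Seller("Kid B", 5, 4.0)]
total_gmv = gmv(sellers)                  # 10 * 2 + 5 * 4
revenue = platform_revenue(sellers, 1.0)  # $1 from each of the 2 kids

print(f"GMV: ${total_gmv:.0f}, platform revenue: ${revenue:.0f}")
# prints "GMV: $40, platform revenue: $2"
```

The gap between the two numbers is the whole point: GMV describes activity on the platform, not what the platform earns.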

Why does GMV matter?

It measures "Vibrancy": High GMV means your platform is busy and people are actually buying things. Investors love to see GMV growing because it signals "Product-Market Fit."

It ignores "The Mess": GMV does not subtract:

Returns/Refunds: If a customer returns a $100 jacket, the GMV still shows that $100 sale happened.

Discounts: If a $50 item is sold for $30 with a coupon, many companies still report the GMV as $50.

Fees: It doesn't account for shipping, taxes, or your own profit.
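To make "the mess" concrete, here is a rough sketch using the two examples above: the returned $100 jacket and the $50 item sold for $30. The order records and the name `net_sales` are assumptions for illustration only. Companies define and report these figures differently.

```python
# Hypothetical order records; field names are illustrative only.
orders = [
    {"item": "jacket", "list_price": 100.0, "paid": 100.0, "returned": True},
    {"item": "coupon item", "list_price": 50.0, "paid": 30.0, "returned": False},
]

# GMV counted at list price, with returns and discounts ignored.
reported_gmv = sum(o["list_price"] for o in orders)

# What customers actually paid and kept, after returns and discounts.
net_sales = sum(o["paid"] for o in orders if not o["returned"])

print(f"Reported GMV: ${reported_gmv:.0f}, net sales: ${net_sales:.0f}")
```

In an interview, this gap is a good reason to say which version of GMV you mean when you name it as the North Star.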

Step 2 — Pick ONE User, Not Everyone

The #1 mistake I see is trying to design for "all users." Interviewers dislike it because it signals shallow thinking.

Map the ecosystem first, then ruthlessly narrow:

Ecosystem: Creators → Viewers → Brands → Advertisers

Your pick: The "High-Intent Researcher"

→ Watches 20+ min of reviews before buying

→ Highest purchase intent, lowest friction to convert

→ Moves GMV directly

Why this person? Because they're already doing the work — they just need the platform to close the loop. That's your leverage point.

Step 3 — Find the Pain Only AI Can Solve

Don't list generic pain points. At least one must be a technical/contextual problem that AI uniquely fixes. This is what separates junior from senior framing.

For the Researcher, the current experience is broken in three ways:

Identification Friction: The viewer sees a specific item at 04:22 (e.g., a specific shade of lipstick or a camera lens) but can’t find the exact SKU in a cluttered description box.

Information Asymmetry (Technical): The viewer has a 'Will it work for me?' question (e.g., 'Is this mic compatible with my iPad?') that is buried somewhere in a 20-minute video or 500 comments.

The Dead-End Link: Creators rarely update their 'back-catalog.' A 2-year-old viral video often points to discontinued products, leading to a zero-conversion dead end.

| Pain Point | User Quote | AI-Solvable? |
|---|---|---|
| Identification Friction | "I see the product but can't find the SKU" | ✅ Yes — visual recognition |
| Cognitive Overload (Information asymmetry) | "I read 100 comments to figure out if this laptop handles coding" | ✅ Yes — semantic summarization |
| Stale Links | "The link in the description is for a discontinued model" | ✅ Yes — dynamic inventory sync |

Step 4 — Propose 3 AI-First Bets (Have a Vision)

This is where you show ambition. Don't just pitch features. Pitch pillars, or bets: each pillar is independent but can eventually be layered with the others. You cannot build all of them at once, so you will need to show prioritization, but the vision is that they stack into one coherent system.

Each pillar is a direct response to one specific pain point. No single pillar solves all three.

| Pillar | What It Is | Which Pain Point It Solves |
|---|---|---|
| Pillar 1: Multi-modal Auto-tagging | Vision + Audio AI scans videos and tags products automatically | Identification Friction — "I see it but can't name the SKU" |
| Pillar 2: Gemini Concierge | An AI that watches the video with you and surfaces products in context | Cognitive Overload — "I have to read 100 comments to know if this laptop is good for coding" |
| Pillar 3: Self-Healing Supply Chain | Tags sync dynamically with real-time inventory | Stale Links — "The link in the description is for a discontinued model" |
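If the interviewer pushes on what "self-healing" means for Pillar 3, a toy sketch can help. Everything here is hypothetical: the `INVENTORY` structure, the SKU names, and the `resolve_tag` helper are invented for illustration and do not describe any real Google Merchant Center API.

```python
from typing import Optional

# Hypothetical merchant inventory: SKU -> status plus an optional successor SKU.
INVENTORY = {
    "LAPTOP-2023": {"in_stock": False, "discontinued": True, "successor": "LAPTOP-2025"},
    "LAPTOP-2025": {"in_stock": True, "discontinued": False, "successor": None},
}


def resolve_tag(sku: Optional[str], inventory: dict) -> Optional[str]:
    """Follow successor links until a purchasable SKU is found, else return None."""
    seen = set()  # guards against accidental successor cycles
    while sku is not None and sku not in seen:
        seen.add(sku)
        record = inventory.get(sku)
        if record is None:
            return None  # unknown SKU: nothing safe to link to
        if record["in_stock"] and not record["discontinued"]:
            return sku
        sku = record["successor"]
    return None


# A tag on an old video still points at the discontinued model.
print(resolve_tag("LAPTOP-2023", INVENTORY))
```

The idea is that the tag stays attached to the video frame while the link behind it is re-resolved against current inventory.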

Why They're Separate (Not Layered Yet)

They operate at different layers of the stack:

Pillar 1 — INFRASTRUCTURE layer

→ Runs in the background, indexes existing content

→ No user interaction needed

→ Think: a crawling/indexing job

Pillar 2 — EXPERIENCE layer

→ Real-time, user-facing, interactive

→ Requires a live session with a user watching

→ Think: a product surface

Pillar 3 — ECOSYSTEM layer

→ Connects to external systems (inventory, merchants)

→ Depends on Pillar 1 tags existing first

→ Think: a data pipeline to third parties

They have completely different build timelines and teams:

| Pillar | Who builds it | Timeline | Dependency |
|---|---|---|---|
| Auto-tagging | ML + Data Infra | 3–6 months MVP | None — can start now |
| Gemini Concierge | Product + AI/UX | 12–18 months | Needs Pillar 1 tags to work well |
| Self-Healing Supply Chain | Platform + Partnerships | 18–24 months | Needs Pillar 1 + merchant API integrations |
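The dependency column is effectively a small graph, and a topological sort is one way to sanity-check the build order. The sketch below uses Python's standard `graphlib` module (Python 3.9+). The dictionary is my own encoding of the table, and merchant API integrations are left out for simplicity.

```python
from graphlib import TopologicalSorter

# Each pillar maps to the set of pillars it depends on (encoded from the table).
dependencies = {
    "Auto-tagging": set(),
    "Gemini Concierge": {"Auto-tagging"},
    "Self-Healing Supply Chain": {"Auto-tagging"},
}

# static_order() yields every pillar after all of its prerequisites.
build_order = list(TopologicalSorter(dependencies).static_order())

print(build_order[0])  # the pillar with no prerequisites comes first
```

The sort only guarantees that auto-tagging comes first. The order of the other two pillars is a product judgment, not something the dependency graph decides.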

In the long run, they absolutely should be layered. That is what the long-term vision step is for. A phased rollout could look like this:

Phase 1: Ship Pillar 1 (Auto-tagging)

↓

Tags exist on videos → foundation is set

Phase 2: Ship Pillar 3 (Self-Healing Supply Chain)

↓

Tags are now live AND accurate → trust is built

Phase 3: Ship Pillar 2 (Gemini Concierge)

↓

Concierge has clean data to work with → fewer hallucinations

Result: The "Living Catalog" — every pixel is actionable

Step 5 — Prioritize With RICE (Show You Live in the Real World)

Vision without prioritization is just wishful thinking. Score your three pillars:

| Pillar | Reach | Impact | Confidence | Effort | Winner? |
|---|---|---|---|---|---|
| Multi-modal Auto-tagging | High | High | Medium | Medium | ✅ Yes |
| Gemini Concierge | Medium | High | Low | High | ❌ Later |
| Self-Healing Supply Chain | Medium | Medium | High | High | 🔄 Phase 2 |

Reach: How many videos/users will this touch?

Impact: How much will this move the North Star (GMV)?

Confidence: How sure are we that the AI won't hallucinate?

Effort: Is this a 3-month MVP or a 2-year R&D project?
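If you want to show the math behind the ranking, the usual RICE score is Reach × Impact × Confidence ÷ Effort. The sketch below is a rough illustration, not a calibrated model. The Low/Medium/High to 1/2/3 mapping is an assumption, and Effort for the Self-Healing Supply Chain is taken as High, in line with its 18–24 month timeline.

```python
# Assumed ordinal scale; real RICE scoring would use estimated numbers.
LEVEL = {"Low": 1, "Medium": 2, "High": 3}

# (reach, impact, confidence, effort) per pillar.
pillars = {
    "Multi-modal Auto-tagging": ("High", "High", "Medium", "Medium"),
    "Gemini Concierge": ("Medium", "High", "Low", "High"),
    "Self-Healing Supply Chain": ("Medium", "Medium", "High", "High"),
}


def rice(reach, impact, confidence, effort):
    """RICE = (Reach * Impact * Confidence) / Effort."""
    return LEVEL[reach] * LEVEL[impact] * LEVEL[confidence] / LEVEL[effort]


scores = {name: rice(*levels) for name, levels in pillars.items()}
ranking = sorted(scores, key=scores.get, reverse=True)

for name in ranking:
    print(f"{name}: {scores[name]:.1f}")
```

With these assumed inputs, auto-tagging ranks first and the Concierge last. The exact numbers matter less than showing the interviewer that the ranking follows from stated inputs.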

My call: Start with auto-tagging. It has the widest reach, leverages Google's existing Computer Vision infrastructure, and generates returns on content that's already sitting on the platform. You're not building from scratch — you're unlocking latent value.

The Concierge is exciting but risky. Ship it later, once you have the accuracy data to back it.

The Winner: "Based on RICE, I’ll prioritize Multi-modal Auto-tagging because it has the highest Reach and leverages our existing Computer Vision moat."

Step 6 — Address AI-Specific Risks

Here's the honest truth: most PM candidates completely skip this step. That's exactly why it's where you win the interview.

AI introduces failure modes that traditional products don't have. Name them directly.

Risk 1: Hallucinations

"What if the AI tags the wrong product and sends users to a competitor?"

Mitigation: Human-in-the-loop. Add a "Verify Tags" button in YouTube Studio. Creators catch errors before they go live. Trust is preserved.
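One way to picture the human-in-the-loop gate: AI-generated tags start unverified and only become visible to viewers after the creator approves them. This is a minimal sketch under that assumption. `ProductTag`, `verify`, and `live_tags` are hypothetical names, not part of YouTube Studio.

```python
from dataclasses import dataclass


@dataclass
class ProductTag:
    sku: str
    timestamp_s: int        # position in the video, in seconds
    verified: bool = False  # flipped to True only by the creator


def verify(tag: ProductTag) -> None:
    """Stand-in for the creator pressing a 'Verify Tags' button."""
    tag.verified = True


def live_tags(tags):
    """Only creator-verified tags are shown to viewers."""
    return [t for t in tags if t.verified]


tags = [ProductTag("MIC-123", 262), ProductTag("LENS-456", 610)]
verify(tags[0])  # the creator confirms the first tag only

print([t.sku for t in live_tags(tags)])
```

The unverified tag never reaches viewers, so a wrong guess by the model costs the creator a click instead of costing the viewer's trust.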

Risk 2: The Creepiness Factor

"What if users feel surveilled because AI knows what they want before they ask?"

Mitigation: Intent-based UI. Shopping tags only surface when the AI detects the user is in "research mode" — based on session behavior, not passive profiling. Opt-in framing, not opt-out.
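The intent-based gate can be sketched as a simple rule over the current session. The threshold and field names below are assumptions: the 20-minute cutoff just echoes the "20+ min of reviews" researcher segment from Step 2, and a real system would likely use richer signals.

```python
from dataclasses import dataclass

# Assumed cutoff, borrowed from the "High-Intent Researcher" definition.
RESEARCH_MINUTES_THRESHOLD = 20


@dataclass
class Session:
    opted_in: bool                 # user explicitly enabled shopping tags
    review_minutes_watched: float  # review content watched in this session only


def should_show_tags(session: Session) -> bool:
    """Show tags only for opted-in users whose current session looks like research."""
    return session.opted_in and session.review_minutes_watched >= RESEARCH_MINUTES_THRESHOLD


print(should_show_tags(Session(opted_in=True, review_minutes_watched=25)))
print(should_show_tags(Session(opted_in=False, review_minutes_watched=25)))
```

Note that the rule reads only the current session and the opt-in flag, which is what separates it from passive profiling.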

Naming these risks doesn't weaken your pitch. It proves you've thought through the full product lifecycle.

Step 7 — Close With Clarity and a 5-Year Vision

Don't end by summarizing your features. End by connecting back to the mission.

The recap (30 seconds):

"We started with the goal of owning the 'Consideration' phase of the purchase funnel. The AI Auto-tagging MVP solves the identification gap for high-intent researchers while unlocking revenue from YouTube's existing back-catalog — content that currently generates zero commercial yield."

The 5-year vision (one sentence):

"This moves YouTube from a discovery platform to a Living Catalog — where every frame of every video is commercially actionable."

One sentence. Memorable. Ties everything together.

The Honest Take

Most AI PM interviews aren't hard because the questions are complex. They're hard because candidates default to feature-level thinking when the interviewer is testing for strategic-level thinking.

This framework works because it forces you to answer a different question first: "Why does this matter to the business?" — before you ever say the word "feature."

Use it. Customize it. Make it yours.