I still remember the Teams message that gave me a very hard time. It was about one of my Agentic AI applications: “Hey, the AI bot is acting weird today. Responses aren't coming in the required format, and they're shorter as well.”

It puzzled me. We hadn't deployed anything new, and the prompt was untouched. No other setting had changed, and I confirmed that temperature was still at 0. Nothing had changed.

And yet, everything had changed.

This message came three weeks after launch. Three weeks of me confidently telling stakeholders, "The system is stable and we are getting good results." But I was wrong.

This is the story of how my "perfect" prompt failed in ways that I never anticipated—and what I learned about building AI systems that don't fall apart when real users touch them.

The Three Engineering Pillars

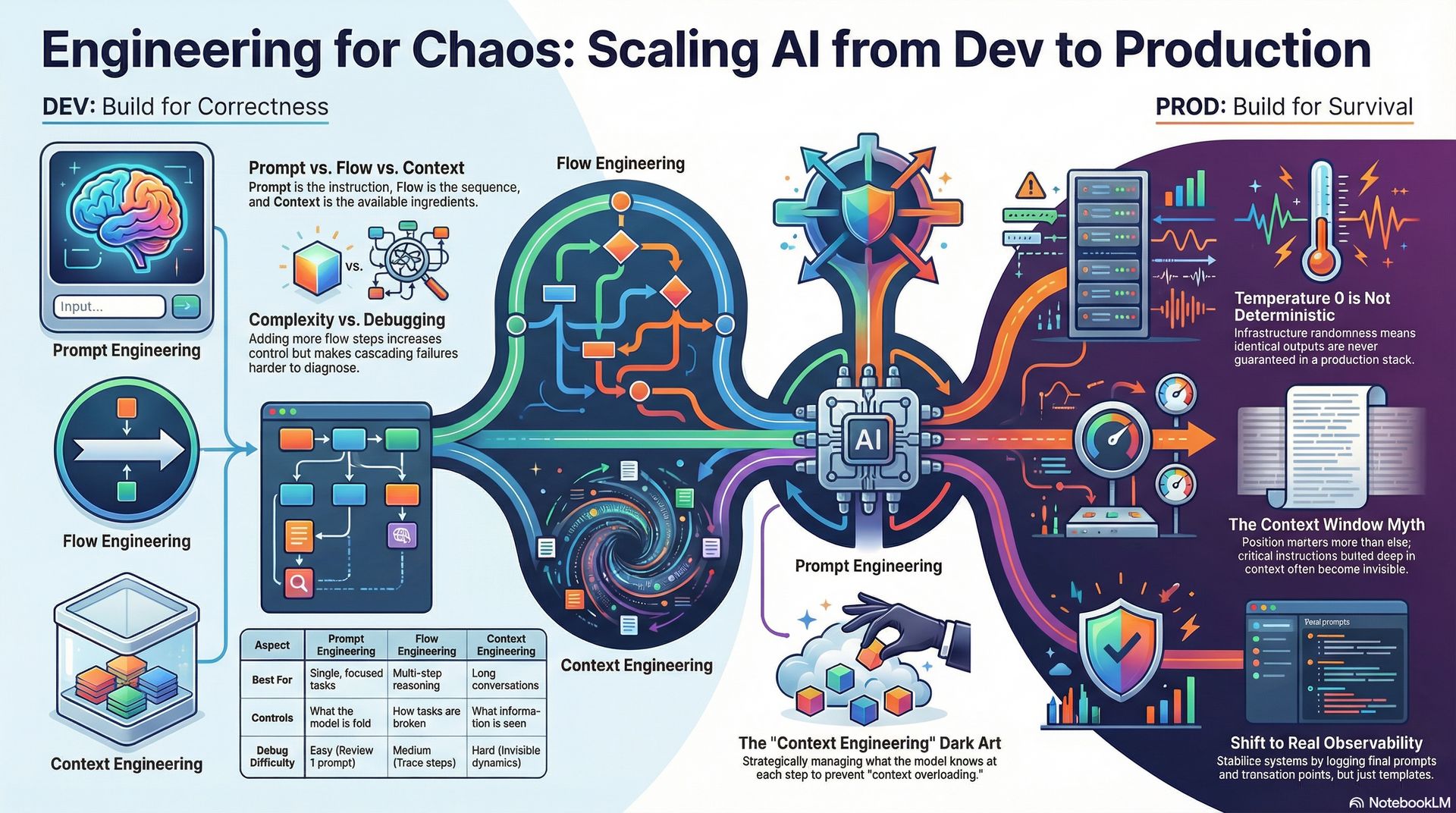

Before I tell you how everything fell apart, let’s understand three concepts: Prompt Engineering, Flow Engineering, and Context Engineering.

Prompt Engineering: The Foundation Everyone Talks About

What it is: Instructing the model through the actual words, structure, and examples in your prompt.

This is generally how we frame the prompt:

Write clear, specific instructions

Use structured formatting (XML tags, markdown)

Add few-shot examples showing the desired behavior

Tune system messages for role and tone

Control parameters like temperature and max tokens

Example prompt:

You are an expert analyst. Follow these steps:

1. Analyze the user's question

2. Break down the problem into components

3. Provide reasoning for each step

4. Give a final recommendation

<examples>

User: Should I invest in Company X?

Assistant: Let me analyze this systematically...

[reasoning steps]

Final recommendation: Based on...

</examples>

Now analyze: {user_question}
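To make the template concrete, here is a minimal Python sketch of rendering it with few-shot examples and a user question. The names `ANALYST_PROMPT`, `build_prompt`, and `GENERATION_PARAMS` are hypothetical, and the parameter values are placeholders rather than recommendations.

```python
from string import Template

# Hypothetical template mirroring the example prompt above. XML-style tags keep
# the few-shot examples separate from the live question.
ANALYST_PROMPT = Template(
    "You are an expert analyst. Follow these steps:\n"
    "1. Analyze the user's question\n"
    "2. Break down the problem into components\n"
    "3. Provide reasoning for each step\n"
    "4. Give a final recommendation\n"
    "<examples>\n$examples\n</examples>\n"
    "Now analyze: $user_question"
)

# Sampling parameters travel with the prompt; the values here are placeholders.
GENERATION_PARAMS = {"temperature": 0, "max_tokens": 800}


def build_prompt(user_question: str, examples: list[tuple[str, str]]) -> str:
    """Render the analyst prompt with few-shot examples and the user's question."""
    rendered = "\n\n".join(f"User: {q}\nAssistant: {a}" for q, a in examples)
    return ANALYST_PROMPT.substitute(examples=rendered, user_question=user_question.strip())
```

`string.Template` uses `$` placeholders, so literal braces in the instructions (for example, a JSON output spec) don't need escaping the way they would with `str.format`.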

But prompt engineering alone is not sufficient for building AI agent applications. We need to design the entire interaction flow between the user, the LLM, tools, and memory so the system behaves predictably in production. That is where flow engineering comes in.

Where it fails in production:

Can't adapt to unexpected inputs

Degrades silently with model drift

Assumes a controlled environment that doesn't exist

Flow Engineering: Orchestrating Multi-Step Intelligence

What it is: Designing the sequence of LLM calls and logic that breaks complex tasks into manageable steps—like building a workflow where each step feeds into the next.

The difference from prompt engineering:

Prompt engineering = What you say in one interaction (one giant prompt)

Flow engineering = How multiple interactions work together (multi-step LLM interactions)

Each step gets its own specialized prompt with a specific job, which gives you more control:

✓ Complexity is broken down into simple subtasks

✓ Each step can be optimized independently

✓ Debugging is easier (you know exactly where things break)

✓ Validation can be added between steps

✓ You get more control over the reasoning process

Example:

User Query

↓

Step 1: Intent Classification

↓

Step 2: Information Gathering (if needed)

↓

Step 3: Analysis (using gathered info)

↓

Step 4: Response Generation

↓

Step 5: Quality Check

↓

Final Output

Instead of one massive prompt saying "analyze this investment opportunity," we have:

Classifier prompt: "Is this a fundamental analysis question, technical analysis question, or risk assessment question?"

Data gathering prompt: "Based on the classification, what information do we need?"

Analysis prompt: "Given this data, perform [specific type] of analysis"

Synthesis prompt: "Combine these analyses into a coherent recommendation"

Each step was 200 tokens instead of one 800-token monster.
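The decomposition above can be sketched as a chain of small calls with a validation gate between steps. This is an illustrative sketch, not a production orchestrator: `llm` stands for any hypothetical function that takes a prompt string and returns text, and the specific gate checks are assumptions chosen for the example.

```python
from typing import Callable

# Hypothetical stand-in for a real LLM client: prompt in, text out.
LLM = Callable[[str], str]

ALLOWED_INTENTS = {"fundamental", "technical", "risk"}


class StepError(Exception):
    """Raised when a step's output fails its validation gate."""


def run_flow(question: str, llm: LLM) -> dict:
    trace = {}  # per-step outputs, kept so a failure can be traced to one step

    # Step 1: intent classification, validated against a closed label set.
    intent = llm(f"Classify as fundamental, technical, or risk. Reply with one word.\n{question}")
    intent = intent.strip().lower()
    if intent not in ALLOWED_INTENTS:
        raise StepError(f"classify: unexpected label {intent!r}")
    trace["classify"] = intent

    # Step 2: information gathering, scoped by the classification.
    needs = llm(f"For a {intent} analysis question, list the data we need.\n{question}")
    if not needs.strip():
        raise StepError("gather: empty output")
    trace["gather"] = needs

    # Step 3: analysis using only the gathered information.
    trace["analyze"] = llm(f"Given this data, perform {intent} analysis:\n{needs}\nQuestion: {question}")

    # Step 4: synthesis, with a format gate on the final answer.
    final = llm("Combine into a coherent recommendation ending with "
                f"'Final recommendation:'.\n{trace['analyze']}")
    if "Final recommendation:" not in final:
        raise StepError("synthesize: missing 'Final recommendation:' line")
    trace["synthesize"] = final
    return trace
```

Because each gate raises a `StepError` naming its step, a bad classification stops the flow at step 1 instead of surfacing as a confusing final answer.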

The trap it can set for you: more steps mean more points of failure. When one step drifted, the entire flow collapsed in ways we hadn't anticipated.

Where it fails in production:

Each step is a potential point of drift

Cascading failures across steps are hard to diagnose

Context accumulates and bloats as flow progresses

Steps can succeed individually but fail collectively
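One common way to keep a single misbehaving step from dragging the whole flow down is a circuit breaker. The sketch below is a minimal, framework-free version with assumed thresholds: after `max_failures` consecutive errors it stops calling the step and returns a fallback until `cooldown_s` has passed, then allows one trial call. The class and parameter names are hypothetical.

```python
import time


class CircuitBreaker:
    """Stops calling a flow step after repeated failures, then retries after a cooldown.

    The default thresholds are illustrative assumptions, not tuned values.
    """

    def __init__(self, max_failures: int = 3, cooldown_s: float = 60.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.clock = clock          # injectable so the behavior can be tested
        self.failures = 0
        self.opened_at = None       # None means closed: calls are allowed

    def call(self, step, *args, fallback=None):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown_s:
                return fallback     # open: skip the step entirely
            # Cooldown elapsed: allow one trial call; a single failure re-opens.
            self.failures = self.max_failures - 1
            self.opened_at = None
        try:
            result = step(*args)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback
        self.failures = 0           # success closes the circuit fully
        return result
```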

Context Engineering: Making the Model Remember What Matters

This is the dark art nobody talks about enough.

What it is: The art and science of deciding what information goes into the model, when, and in what form, so the LLM can make the right decision consistently.

The difference from the other two:

Prompt engineering = The instructions themselves

Flow engineering = The sequence of instructions

Context engineering = Strategically managing what information the model sees and when it sees it: in short, what the model knows at each step

Think of it this way:

Prompt = Recipe instructions

Flow = Cooking steps in order

Context = The ingredients and tools available to the chef

In context engineering, we design what context to include, what to exclude, how to structure it, and how to refresh it over time so that the model produces accurate, grounded, and repeatable outputs.

Types of context we can engineer:

System Context - roles, guardrails, output constraints

User Context - current query, session intent, user preferences

Task Context - instructions, few shot examples, formatting rules

Knowledge Context (RAG) - docs, PDFs, APIs, internal data

Temporal Context - what is recent vs. outdated, versioned knowledge

High priority - always include

- System instructions

- Current user query

- Relevant conversation history (last 3 turns)

Medium priority - include if space permits

- User preferences

- Domain-specific knowledge

- Previous successful examples

Low priority - include only if needed

- Full conversation history

- Tangential information

- Optional metadata
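The priority tiers above translate naturally into a budgeted assembly step. This minimal sketch assumes a word count as a stand-in for real token counting, and it fails loudly if the always-include items don't fit rather than truncating them silently. `ContextItem` and `assemble_context` are hypothetical names.

```python
from dataclasses import dataclass

HIGH, MEDIUM, LOW = 0, 1, 2  # smaller number = higher priority


@dataclass
class ContextItem:
    name: str
    text: str
    priority: int


def count_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    # A real system should use the model's own tokenizer.
    return len(text.split())


def assemble_context(items: list[ContextItem], budget: int) -> tuple[list[ContextItem], list[str]]:
    """Always include HIGH items, then fill the remaining budget tier by tier.

    Raises instead of silently truncating if the HIGH items alone exceed the budget.
    Returns (included_items, names_of_dropped_items).
    """
    high = [i for i in items if i.priority == HIGH]
    used = sum(count_tokens(i.text) for i in high)
    if used > budget:
        raise ValueError(f"high-priority context needs {used} tokens; budget is {budget}")
    included, dropped = list(high), []
    # sorted() is stable, so items keep their original order within a tier.
    for item in sorted((i for i in items if i.priority != HIGH), key=lambda i: i.priority):
        cost = count_tokens(item.text)
        if used + cost <= budget:
            included.append(item)
            used += cost
        else:
            dropped.append(item.name)  # record it so the omission shows up in logs
    return included, dropped
```

Returning the dropped names, rather than discarding them quietly, is what makes truncation observable later.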

When assembling context, a few practices matter most:

Context selection: include relevant, recent, high-signal information.

Context ranking: put critical information before noisy data.

Context compression: summarize long histories, extract key facts, and remove redundancy.

Context isolation: keep instructions, examples, retrieved knowledge, and user input in separate sections, and never mix them.

Context validation: check that the assembled context is actually valid before calling the model.
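Isolation and validation can be sketched as two small functions: one renders each context type inside its own tag with instructions first, and one checks the pieces before the model is called. The section names, tag format, and checks are assumptions for illustration.

```python
# Hypothetical section names; order encodes ranking (critical instructions first).
SECTION_ORDER = ["instructions", "examples", "knowledge", "user_input"]


def render_isolated(sections: dict[str, str]) -> str:
    """Render each context type inside its own tag, in SECTION_ORDER."""
    parts = []
    for name in SECTION_ORDER:
        body = sections.get(name, "").strip()
        if body:
            parts.append(f"<{name}>\n{body}\n</{name}>")
    return "\n\n".join(parts)


def validate_context(sections: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the context looks valid."""
    problems = []
    for required in ("instructions", "user_input"):
        if not sections.get(required, "").strip():
            problems.append(f"missing {required}")
    # Section tags inside content would blur the boundaries between sections.
    for name, body in sections.items():
        for tag in SECTION_ORDER:
            if f"<{tag}>" in body or f"</{tag}>" in body:
                problems.append(f"{name} contains reserved tag <{tag}>")
    unknown = set(sections) - set(SECTION_ORDER)
    if unknown:
        problems.append(f"unknown sections: {sorted(unknown)}")
    return problems
```

In use, you would call `validate_context` first and only render and send the prompt when it returns an empty list.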

Context engineering becomes critical once you add memory. Everything was working great for me until I added memory; after that, the model stopped answering the current question correctly. This happened because:

Old conversations dominated the context

New intent was diluted

This wasn’t a prompt problem; it was context overload. Once I fixed that, everything went back to normal.
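A minimal sketch of one way to address this kind of overload: keep the last few turns verbatim, compress older turns into a summary, and place the current question last with an explicit label. `summarize` is a hypothetical hook (for example, a cheap LLM call), and this is one possible approach, not necessarily the exact fix I applied.

```python
from typing import Callable


def build_memory_context(history: list[tuple[str, str]], current_query: str,
                         summarize: Callable[[list[str]], str], keep_last: int = 3) -> str:
    """Keep recent turns verbatim, compress older ones, and put the current query last.

    `summarize` is a hypothetical hook. keep_last=3 mirrors the "last 3 turns"
    rule in the priority list.
    """
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")
    older, recent = history[:-keep_last], history[-keep_last:]
    lines = []
    if older:
        # Old turns become one short summary line instead of dominating the context.
        lines.append("Earlier conversation (summary): " + summarize([f"{r}: {t}" for r, t in older]))
    lines += [f"{role}: {text}" for role, text in recent]
    # The current question goes last and is labeled so new intent isn't diluted.
    lines.append(f"CURRENT QUESTION (answer this): {current_query}")
    return "\n".join(lines)
```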

The trap I fell into: context engineering in dev and in prod turned out to be completely different games. My carefully crafted context strategy worked for 200-token user inputs. Real users sent 2000-token rambling queries that broke everything.

Where it fails in production:

User inputs are unpredictable in length

Context window fills up faster than you anticipate

Attention patterns change with model updates

What worked for 5k tokens breaks at 50k tokens
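A simple guard against unpredictable input length is to check each query against a budget and compress only the oversized ones. This sketch assumes word count as a token proxy and a hypothetical `summarize(text, target_tokens)` hook; it also hard-caps the summary, since a summarizer can overshoot its target too.

```python
from typing import Callable


def prepare_user_input(query: str, max_tokens: int,
                       summarize: Callable[[str, int], str]) -> tuple[str, bool]:
    """Pass short queries through; compress oversized ones before they crowd the context.

    Returns (text_for_model, was_summarized). Token counting is a crude
    word-count assumption.
    """
    if len(query.split()) <= max_tokens:
        return query, False
    summary = summarize(query, max_tokens)
    # Don't trust the summarizer blindly: enforce the budget on its output as well.
    words = summary.split()
    if len(words) > max_tokens:
        summary = " ".join(words[:max_tokens])
    return summary, True
```

Returning the `was_summarized` flag lets you log how often real users exceed the budget you designed for.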

The Comparison: What Each Solves (And Doesn't)

Here's what I learned about when each approach matters:

| Aspect | Prompt Engineering | Flow Engineering | Context Engineering |
|---|---|---|---|
| Best for | Single, focused tasks | Complex, multi-step reasoning | Long conversations & personalization |
| Controls | What the model is told to do | How tasks are broken down | What information the model can see |
| Fails when | Task is too complex for one prompt | Steps become interdependent | Context gets too long or noisy |
| Scales by | Adding more detail to instructions | Adding more steps to flow | Being smarter about what to include |
| Debug difficulty | Easy - one prompt to review | Medium - trace through steps | Hard - invisible token dynamics |

Context Engineering + Flow Engineering = Production Stability

Flow engineering decides when to add context

Context engineering decides what to add

Prompt engineering decides how to instruct

You need all three.

My fatal assumption:

I thought: "If I master all three, production will be reliable."

The truth: These are necessary but not sufficient.

Summary: The Four Hard Truths Nobody Told Me About Production LLMs

Building LLM systems taught me a humbling lesson: what works in development quietly breaks in production—and often in ways you don’t immediately see.

Truth #1: Your Production Prompt Is Not Your Dev Prompt

The prompt I tested was clean and controlled. The prompt that reached the model in production was chaotic:

User inputs were 10× longer

Conversation history and tool outputs bloated the context

Formatting broke due to special characters

Context limits silently truncated critical instructions

Result:

Every step of the flow worked individually, but together they caused cascading failures.

Context engineering shifted from “careful curation” to token-budget triage under pressure.

Truth #2: Temperature = 0 Is Not Truly Deterministic

I assumed temperature 0 meant identical outputs. It doesn’t.

Backend updates

Parallel execution

Infrastructure-level randomness

All introduce subtle variation. You can reduce randomness, not eliminate it. Determinism is an illusion unless the entire stack is frozen.
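Rather than assuming determinism, you can measure it. This sketch sends the same prompt repeatedly and counts distinct outputs; `call_model` is a hypothetical wrapper around your provider's client configured with temperature 0.

```python
import hashlib
from collections import Counter
from typing import Callable


def measure_variance(call_model: Callable[[str], str], prompt: str, runs: int = 20) -> dict:
    """Send the same prompt `runs` times and report how consistent the outputs are.

    Whitespace is normalized so spacing-only differences don't count as variation.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    digests = Counter()
    for _ in range(runs):
        normalized = " ".join(call_model(prompt).split())
        digests[hashlib.sha256(normalized.encode("utf-8")).hexdigest()] += 1
    top_count = digests.most_common(1)[0][1]
    return {
        "runs": runs,
        "distinct_outputs": len(digests),
        "agreement_rate": top_count / runs,  # share of runs matching the most common output
    }
```

An `agreement_rate` below 1.0 at temperature 0 is exactly the kind of variation described above.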

Truth #3: Model Drift Happens Without Your Permission

Unlike traditional software, LLM behavior can change even when you deploy nothing.

Attention patterns shift

Context weighting changes

Previously reliable instruction ordering stops working

Same prompt. Same structure. Different interpretation.

Model drift quietly broke my context strategy before I even realized drift was happening.

Truth #4: Long Context Windows Are a Comforting Myth

A large context window doesn’t mean instructions are respected.

Position matters more than size

Recency bias dominates

Critical instructions pushed deep into context become invisible

The model wasn’t failing—it was answering a different question than the one I thought I was asking.

The Comparison That Changed How I Build

In development, I optimized for:

Prompt clarity (prompt engineering)

Task decomposition (flow engineering)

Information structure (context engineering)

But in production, I was hit by:

Prompt drift

Flow cascades

Context chaos

The mindset shift was simple but painful:

Dev mindset: “How do I make this work?”

Prod mindset: “How does this keep working when everything varies?”

What Actually Fixed Production (It Wasn't Better Prompting)

After weeks of firefighting, here's what actually stabilized our system.

1. Real Observability

We stopped guessing and started logging reality:

Final prompts (not templates)

Token usage, truncation points, and context growth

Per-step flow metrics and context inclusion

Big discovery: 40% of our prompt failures were silent context truncation issues.

Once we saw where tokens were exploding, fixes became obvious—like adding dynamic summarization for oversized user queries.
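A minimal sketch of the kind of per-step record that makes truncation visible. The field names are hypothetical, token counts use a word-count approximation, and `emit` stands in for whatever logger or tracing backend you use. Comparing `prompt_tokens` across turns of the same session shows context growth.

```python
import hashlib
import json
import time


def log_llm_call(step: str, final_prompt: str, included: list[str], dropped: list[str],
                 context_limit: int, output: str, emit=print) -> dict:
    """Record what the model actually saw for one flow step, as one JSON line."""
    prompt_tokens = len(final_prompt.split())  # crude token estimate
    record = {
        "ts": time.time(),
        "step": step,
        # Log the rendered prompt (not the template), plus a hash for quick diffing.
        "final_prompt": final_prompt,
        "prompt_sha256": hashlib.sha256(final_prompt.encode("utf-8")).hexdigest(),
        "prompt_tokens": prompt_tokens,
        "context_limit": context_limit,
        "over_limit": prompt_tokens > context_limit,
        "included_context": included,
        "dropped_context": dropped,  # where truncation actually happened
        "output_tokens": len(output.split()),
    }
    emit(json.dumps(record))
    return record
```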

2. Output Guardrails Over Model Trust

We added:

Schema validation, retries, fallbacks, and quality checks

Validation gates and circuit breakers in flows

Smart context compression and truncation strategies

Result: 60% fewer user-visible failures, not because the model improved—but because the system learned how to handle variance.
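Schema validation, retries, and a fallback can be combined in one wrapper. This sketch uses only the standard library; the output contract (`answer`, `confidence`, `sources`), the retry message, and `call_model` are all hypothetical. Libraries such as Pydantic or jsonschema could replace the hand-written `validate` in a real system.

```python
import json
from typing import Callable, Optional

# Hypothetical output contract: keys and types the UI expects from the model.
REQUIRED_FIELDS = {"answer": str, "confidence": (int, float), "sources": list}

FALLBACK = {"answer": "Sorry, I couldn't produce a reliable answer. Please try rephrasing.",
            "confidence": 0.0, "sources": []}


def validate(raw: str) -> Optional[dict]:
    """Parse model output and check it against the contract; None means invalid."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(key), expected_type):
            return None
    # Quality check beyond types: confidence must be a probability.
    if not 0.0 <= data["confidence"] <= 1.0:
        return None
    return data


def guarded_call(call_model: Callable[[str], str], prompt: str,
                 max_attempts: int = 3) -> tuple[dict, int]:
    """Retry invalid outputs with a corrective note; return (result, attempts_used)."""
    attempt_prompt = prompt
    for attempt in range(1, max_attempts + 1):
        result = validate(call_model(attempt_prompt))
        if result is not None:
            return result, attempt
        attempt_prompt = (prompt + "\n\nYour previous reply was not valid JSON with fields "
                          f"{sorted(REQUIRED_FIELDS)}. Reply with JSON only.")
    # Fresh list so callers can't mutate the shared FALLBACK constant.
    return {**FALLBACK, "sources": []}, max_attempts
```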

3. Drift Detection That Actually Worked

Instead of waiting for users to complain, we:

Ran daily golden test cases

Monitored semantic drift per step (not just end-to-end)

Detected model drift in hours, not weeks

This let us fix only the broken step instead of rebuilding the entire pipeline.
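Per-step drift checks can be sketched as golden cases run against each step function, with a similarity score compared to a threshold. Here `difflib.SequenceMatcher` is a deliberately crude stand-in for semantic similarity (an embedding-based score would be the more usual choice), and the 0.8 threshold is an assumption.

```python
from difflib import SequenceMatcher
from statistics import mean
from typing import Callable


def similarity(a: str, b: str) -> float:
    # Stand-in for semantic similarity: character-level ratio from difflib.
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def detect_drift(steps: dict[str, Callable[[str], str]],
                 golden: dict[str, list[tuple[str, str]]],
                 threshold: float = 0.8) -> dict[str, float]:
    """Run golden cases per step; return {step: mean_score} for steps below threshold."""
    drifted = {}
    for name, cases in golden.items():
        if not cases:
            continue
        score = mean(similarity(steps[name](inp), expected) for inp, expected in cases)
        if score < threshold:
            drifted[name] = round(score, 3)
    return drifted
```

Because results are keyed by step, a daily run points directly at the step that drifted.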

4. Product Metrics Beat Prompt Metrics

I stopped optimizing for “prompt quality” and started tracking:

Task completion

Time to success

Retry rates

User satisfaction
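These metrics are cheap to compute once sessions are logged. This sketch assumes a hypothetical `Session` record built from analytics events; how you define "completed" and "retry" depends on your product.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Session:
    # Hypothetical per-session record assembled from product analytics events.
    attempts: int                       # times the user asked (1 = no retries)
    completed: bool                     # did the user finish the task?
    seconds_to_done: Optional[float]    # time to success; None if not completed
    satisfaction: Optional[int] = None  # e.g. a 1-5 rating, if given


def product_metrics(sessions: list[Session]) -> dict:
    if not sessions:
        raise ValueError("no sessions to measure")
    done = [s for s in sessions if s.completed]
    ratings = [s.satisfaction for s in sessions if s.satisfaction is not None]
    return {
        "task_completion_rate": len(done) / len(sessions),
        "median_time_to_success_s": median([s.seconds_to_done for s in done]) if done else None,
        "retry_rate": sum(s.attempts > 1 for s in sessions) / len(sessions),
        "avg_satisfaction": sum(ratings) / len(ratings) if ratings else None,
    }
```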

The uncomfortable truth:

A simpler, faster flow with guardrails beat a more accurate but slower one.

Users preferred shorter, faster answers over perfect, exhaustive responses.

We didn't ship the best prompt, but the product started working.

Production LLM systems don’t fail because prompts are bad. They fail because we design for control—but deploy into chaos.

Dev is about correctness.

Production is about survival under variance.

Once we accept that, everything about how we build AI systems changes.

Now it’s time for interview preparation: what kinds of questions you might get, and how to handle them.

1. "How do you distinguish between Prompt, Flow, and Context Engineering when designing an AI Agent?"

• The Goal: To see if you understand that AI development is more than just writing a "perfect" prompt.

• How to Handle This:

◦ Use the "Cooking" analogy: Prompt engineering is the recipe instructions (what the model is told to do); Flow engineering is the cooking steps in order (the sequence of instructions); and Context engineering is the ingredients and tools available (what information the model sees).

◦ Emphasize that while prompt engineering is the foundation, Flow engineering is necessary to break complex tasks into manageable subtasks, making them easier to debug and optimize independently.

◦ Explain that Context engineering is the "dark art" of strategically deciding what data to include or exclude so the model makes consistent decisions.

2. "A model that was performing perfectly in development is now acting 'weird' or failing in production, even though no code was changed. What is your first step?"

• The Goal: To test your understanding of Model Drift and production variance.

• How to Handle This:

◦ Acknowledge that temperature = 0 is not truly deterministic; backend updates or infrastructure-level randomness can introduce variation even if the prompt is untouched.

◦ Mention Model Drift: LLM behavior can change without a new deployment because attention patterns and context weighting shift.

◦ Propose a solution centered on Real Observability: You would move from reviewing templates to logging final prompts and token usage to identify issues like silent context truncation.

3. "How do you manage a situation where user inputs are significantly longer than what you tested for in development?"

• The Goal: To evaluate your strategy for Context Engineering and resource management.

• How to Handle This:

◦ Explain that real-world users often send "rambling queries" that can break a system optimized for short inputs.

◦ Detail a Context Prioritization strategy: Always include high-priority items like system instructions and the current query, while summarizing or compressing low-priority items like full conversation history.

◦ Suggest technical fixes like dynamic summarization for oversized queries and context isolation (separating instructions from retrieved knowledge) to prevent the model from getting confused.

4. "When scaling an AI product, how do you decide between a single 'master prompt' and a multi-step 'flow'?"

• The Goal: To see if you understand the trade-offs between complexity and reliability.

• How to Handle This:

◦ Argue for Flow Engineering because "monster prompts" fail to adapt to unexpected inputs and degrade silently.

◦ Highlight the benefits of multi-step flows: You can validate outputs between steps and optimize each sub-prompt (e.g., using 200 tokens instead of 800) for better control.

◦ Caveat the answer by noting that more steps = more points of failure; if one step drifts, the entire flow can collapse (cascading failure).

5. "What metrics do you prioritize for a production AI agent? Is 'accuracy' the most important one?"

• The Goal: To see if you have a "Production Mindset" focused on user value rather than just technical benchmarks.

• How to Handle This:

◦ Explain that Product Metrics often beat Prompt Metrics: Instead of just "prompt quality," track Task Completion, Time to Success, and Retry Rates.

◦ Mention that users often prefer shorter, faster answers that are reliable over perfect, exhaustive responses that take too long.

◦ Discuss the importance of Output Guardrails: Using schema validation and circuit breakers allows the system to handle variance even when the model itself isn't "perfect".

6. "How do you handle the 'Lost in the Middle' problem where a model ignores instructions placed in the middle of a large context window?"

• The Goal: To debunk the myth that long context windows solve all information retrieval problems.

• How to Handle This:

◦ State clearly that the promise of long context windows is a myth: a model won't necessarily respect instructions just because they fit in the window.

◦ Point out that position matters more than size: Recency bias dominates, and instructions pushed deep into the context often become invisible.

◦ Solution: Use Context Ranking to put critical information first and remove redundant data through context compression.